Speech & Audio

Audio source separation, recognition, and understanding.

Our current research focuses on application of machine learning to estimation and inference problems in speech and audio processing. Topics include end-to-end speech recognition and enhancement, acoustic modeling and analysis, statistical dialog systems, as well as natural language understanding and adaptive multimodal interfaces.

Researchers

-

Awards

-

AWARD MERL team wins the Generative Data Augmentation of Room Acoustics (GenDARA) 2025 Challenge

Date: April 7, 2025

Awarded to: Christopher Ick, Gordon Wichern, Yoshiki Masuyama, François G. Germain, and Jonathan Le Roux

MERL Contacts: Jonathan Le Roux; Yoshiki Masuyama; Gordon Wichern

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief: MERL's Speech & Audio team ranked 1st out of 3 teams in the Generative Data Augmentation of Room Acoustics (GenDARA) 2025 Challenge, which focused on "generating room impulse responses (RIRs) to supplement a small set of measured examples and using the augmented data to train speaker distance estimation (SDE) models". The team was led by MERL intern Christopher Ick, and also included Gordon Wichern, Yoshiki Masuyama, François G. Germain, and Jonathan Le Roux.

The GenDARA Challenge was organized as part of the Generative Data Augmentation (GenDA) workshop at the 2025 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP 2025), and held on April 7, 2025 in Hyderabad, India. Yoshiki Masuyama presented the team's method, "Data Augmentation Using Neural Acoustic Fields With Retrieval-Augmented Pre-training".

The GenDARA challenge aims to promote the use of generative AI to synthesize RIRs from limited room data, as collecting or simulating RIR datasets at scale remains a significant challenge due to high costs and trade-offs between accuracy and computational efficiency. The challenge asked participants to first develop RIR generation systems capable of expanding a sparse set of labeled room impulse responses by generating RIRs at new source–receiver positions. They were then tasked with using this augmented dataset to train speaker distance estimation systems. Ranking was determined by the overall performance on the downstream SDE task.

MERL’s approach to the GenDARA challenge centered on a geometry-aware neural acoustic field model that was first pre-trained on a large external RIR dataset to learn generalizable mappings from 3D room geometry to room impulse responses. For each challenge room, the model was then adapted or fine-tuned using the small number of provided RIRs, enabling high-fidelity generation of RIRs at unseen source–receiver locations. These augmented RIR sets were subsequently used to train the SDE system, improving speaker distance estimation by providing richer and more diverse acoustic training data.
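As a rough illustration of how a generated RIR can become a training example for speaker distance estimation, the sketch below convolves a dry signal with an RIR and labels the result with the source–receiver distance. This is a minimal sketch under assumptions: the function name `make_sde_example`, the toy signals, and the use of plain convolution are illustrative and are not taken from the team's system.

```python
import numpy as np

def make_sde_example(dry, rir, src_pos, mic_pos):
    """Build one (reverberant signal, distance label) pair.

    Assumption: the reverberant signal is simulated by convolving a dry
    signal with an RIR, and the label is the Euclidean source-receiver
    distance. Both choices are illustrative, not the challenge pipeline.
    """
    # Full linear convolution: output length is len(dry) + len(rir) - 1.
    wet = np.convolve(dry, rir)
    # Distance label in the same units as the positions (e.g., meters).
    distance = float(np.linalg.norm(np.asarray(src_pos) - np.asarray(mic_pos)))
    return wet, distance

# Toy data (hypothetical): a unit impulse as "speech" and a 3-tap RIR.
dry = np.array([1.0, 0.0, 0.0, 0.0])
rir = np.array([0.0, 0.5, 0.25])
wet, distance = make_sde_example(dry, rir, src_pos=(0.0, 0.0, 0.0), mic_pos=(3.0, 4.0, 0.0))
```

In practice, RIRs generated at many new source–receiver positions would each yield pairs like this, which is one way an augmented RIR set can provide more varied training data.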


AWARD MERL team wins the Listener Acoustic Personalisation (LAP) 2024 Challenge

Date: August 29, 2024

Awarded to: Yoshiki Masuyama, Gordon Wichern, François G. Germain, Christopher Ick, and Jonathan Le Roux

MERL Contacts: Jonathan Le Roux; Gordon Wichern; Yoshiki Masuyama

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief: MERL's Speech & Audio team ranked 1st out of 7 teams in Task 2 of the 1st SONICOM Listener Acoustic Personalisation (LAP) Challenge, which focused on "Spatial upsampling for obtaining a high-spatial-resolution HRTF from a very low number of directions". The team was led by Yoshiki Masuyama, and also included Gordon Wichern, François Germain, MERL intern Christopher Ick, and Jonathan Le Roux.

The LAP Challenge workshop and award ceremony were hosted by the 32nd European Signal Processing Conference (EUSIPCO 2024) on August 29, 2024 in Lyon, France. Yoshiki Masuyama presented the team's method, "Retrieval-Augmented Neural Field for HRTF Upsampling and Personalization", and received the award from Prof. Michele Geronazzo (University of Padova, IT, and Imperial College London, UK), Chair of the Challenge's Organizing Committee.

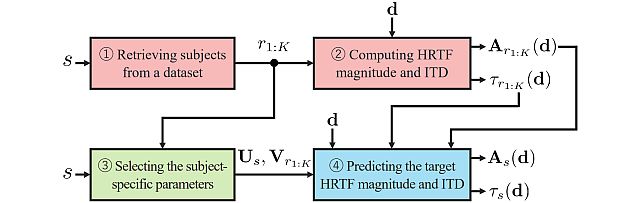

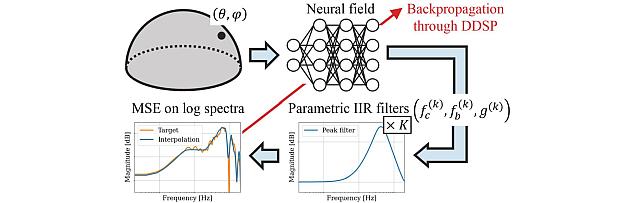

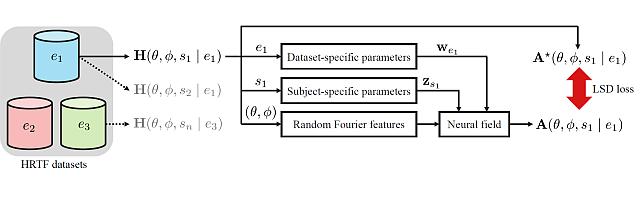

The LAP challenge aims to explore challenges in the field of personalized spatial audio, with the first edition focusing on the spatial upsampling and interpolation of head-related transfer functions (HRTFs). HRTFs with dense spatial grids are required for immersive audio experiences, but their recording is time-consuming. Although HRTF spatial upsampling has recently shown remarkable progress with approaches involving neural fields, HRTF estimation accuracy remains limited when upsampling from only a few measured directions, e.g., 3 or 5 measurements. The MERL team tackled this problem by proposing a retrieval-augmented neural field (RANF). RANF retrieves a subject whose HRTFs are close to those of the target subject at the measured directions from a library of subjects. The HRTF of the retrieved subject at the target direction is fed into the neural field in addition to the desired sound source direction. The team also developed a neural network architecture that can handle an arbitrary number of retrieved subjects, inspired by a multi-channel processing technique called transform-average-concatenate.
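The retrieval step described above can be sketched as a nearest-neighbor search over a library of subjects, compared only at the measured directions. This is a minimal sketch under assumptions: the function name `retrieve_subjects`, the array layout, and the root-mean-square log-magnitude distance are illustrative choices, not the metric or code used by the team.

```python
import numpy as np

def retrieve_subjects(target_hrtf, library_hrtf, k=1):
    """Return indices and distances of the k closest library subjects.

    target_hrtf:  (n_measured_dirs, n_freqs) magnitudes of the target subject
    library_hrtf: (n_subjects, n_measured_dirs, n_freqs) magnitudes of the
                  library subjects at the same measured directions
    Assumption: closeness is an RMS difference of log-magnitudes in dB.
    """
    eps = 1e-8  # avoids log of zero
    target_db = 20.0 * np.log10(np.abs(target_hrtf) + eps)
    library_db = 20.0 * np.log10(np.abs(library_hrtf) + eps)
    # One distance per subject, averaged over directions and frequencies.
    dist = np.sqrt(np.mean((library_db - target_db[None]) ** 2, axis=(1, 2)))
    order = np.argsort(dist)[:k]
    return order, dist[order]

# Toy library (hypothetical): 5 subjects, 3 measured directions, 16 bins.
rng = np.random.default_rng(0)
library = rng.uniform(0.1, 1.0, size=(5, 3, 16))
target = library[2] * 1.01  # target almost identical to subject 2
idx, d = retrieve_subjects(target, library, k=2)
```

The retrieved subjects' HRTFs at the desired direction would then be passed to the neural field as additional input, alongside the source direction.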


AWARD Jonathan Le Roux elevated to IEEE Fellow

Date: January 1, 2024

Awarded to: Jonathan Le Roux

MERL Contact: Jonathan Le Roux

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief: MERL Distinguished Scientist and Speech & Audio Senior Team Leader Jonathan Le Roux has been elevated to IEEE Fellow, effective January 2024, "for contributions to multi-source speech and audio processing."

Mitsubishi Electric celebrated Dr. Le Roux's elevation and that of another researcher from the company, Dr. Shumpei Kameyama, with a worldwide news release on February 15.

Dr. Jonathan Le Roux has made fundamental contributions to the field of multi-speaker speech processing, especially to the areas of speech separation and multi-speaker end-to-end automatic speech recognition (ASR). His contributions constituted a major advance in realizing a practically usable solution to the cocktail party problem, enabling machines to replicate humans’ ability to concentrate on a specific sound source, such as a certain speaker within a complex acoustic scene—a long-standing challenge in the speech signal processing community. Additionally, he has made key contributions to the measures used for training and evaluating audio source separation methods, developing several new objective functions to improve the training of deep neural networks for speech enhancement, and analyzing the impact of metrics used to evaluate the signal reconstruction quality. Dr. Le Roux’s technical contributions have been crucial in promoting the widespread adoption of multi-speaker separation and end-to-end ASR technologies across various applications, including smart speakers, teleconferencing systems, hearables, and mobile devices.

IEEE Fellow is the highest grade of membership of the IEEE. It honors members with an outstanding record of technical achievements, contributing importantly to the advancement or application of engineering, science and technology, and bringing significant value to society. Each year, following a rigorous evaluation procedure, the IEEE Fellow Committee recommends a select group of recipients for elevation to IEEE Fellow. Less than 0.1% of voting members are selected annually for this member grade elevation.


See All Awards for Speech & Audio -

-

News & Events

-

NEWS MERL Researchers at NeurIPS 2025 presented 2 conference papers, 5 workshop papers, and organized a workshop.

Date: December 2, 2025 - December 7, 2025

Where: San Diego

MERL Contacts: Petros T. Boufounos; Anoop Cherian; Radu Corcodel; Stefano Di Cairano; Chiori Hori; Christopher R. Laughman; Suhas Lohit; Pedro Miraldo; Saviz Mowlavi; Kuan-Chuan Peng; Arvind Raghunathan; Diego Romeres; Abraham P. Vinod; Pu (Perry) Wang

Research Areas: Artificial Intelligence, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

Brief: MERL researchers presented 2 main-conference papers and 5 workshop papers, and organized a workshop, at NeurIPS 2025.

Main Conference Papers:

1) Sorachi Kato, Ryoma Yataka, Pu Wang, Pedro Miraldo, Takuya Fujihashi, and Petros Boufounos, "RAPTR: Radar-based 3D Pose Estimation using Transformer", Code available at: https://github.com/merlresearch/radar-pose-transformer

2) Runyu Zhang, Arvind Raghunathan, Jeff Shamma, and Na Li, "Constrained Optimization From a Control Perspective via Feedback Linearization"

Workshop Papers:

1) Yuyou Zhang, Radu Corcodel, Chiori Hori, Anoop Cherian, and Ding Zhao, "SpinBench: Perspective and Rotation as a Lens on Spatial Reasoning in VLMs", NeurIPS 2025 Workshop on SPACE in Vision, Language, and Embodied AI (SpaVLE) (Best Paper Runner-up)

2) Xiaoyu Xie, Saviz Mowlavi, and Mouhacine Benosman, "Smooth and Sparse Latent Dynamics in Operator Learning with Jerk Regularization", Workshop on Machine Learning and the Physical Sciences (ML4PS)

3) Spencer Hutchinson, Abraham Vinod, François Germain, Stefano Di Cairano, Christopher Laughman, and Ankush Chakrabarty, "Quantile-SMPC for Grid-Interactive Buildings with Multivariate Temporal Fusion Transformers", Workshop on UrbanAI: Harnessing Artificial Intelligence for Smart Cities (UrbanAI)

4) Yuki Shirai, Kei Ota, Devesh Jha, and Diego Romeres, "Sim-to-Real Contact-Rich Pivoting via Optimization-Guided RL with Vision and Touch", Workshop on Embodied World Models for Decision Making

5) Mark Van der Merwe and Devesh Jha, "In-Context Policy Iteration for Dynamic Manipulation", Workshop on Embodied World Models for Decision Making

Workshop Organized:

MERL members co-organized the Multimodal Algorithmic Reasoning (MAR) Workshop (https://marworkshop.github.io/neurips25/). Organizers: Anoop Cherian (Mitsubishi Electric Research Laboratories), Kuan-Chuan Peng (Mitsubishi Electric Research Laboratories), Suhas Lohit (Mitsubishi Electric Research Laboratories), Honglu Zhou (Salesforce AI Research), Kevin Smith (Massachusetts Institute of Technology), and Joshua B. Tenenbaum (Massachusetts Institute of Technology).


EVENT SANE 2025 - Speech and Audio in the Northeast

Date: Friday, November 7, 2025

Location: Google, New York, NY

MERL Contacts: Jonathan Le Roux; Yoshiki Masuyama

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief: SANE 2025, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Friday, November 7, 2025 at Google, in New York, NY.

It was the 12th edition in the SANE series of workshops, which started in 2012 and is typically held every year alternately in Boston and New York. Since the first edition, the audience has grown to about 200 participants and 50 posters each year, and SANE has established itself as a vibrant, must-attend event for the speech and audio community across the northeast and beyond.

SANE 2025 featured invited talks by six leading researchers from the Northeast as well as from the wider community: Dan Ellis (Google DeepMind), Leibny Paola Garcia Perera (Johns Hopkins University), Yuki Mitsufuji (Sony AI), Julia Hirschberg (Columbia University), Yoshiki Masuyama (MERL), and Robin Scheibler (Google DeepMind). It also featured a lively poster session with 50 posters.

MERL Speech and Audio Team's Yoshiki Masuyama presented a well-received overview of the team's recent work on "Neural Fields for Spatial Audio Modeling". His talk highlighted how neural fields are reshaping spatial audio research by enabling flexible, data-driven interpolation of head-related transfer functions and room impulse responses. He also discussed the integration of sound-propagation physics into neural field models through physics-informed neural networks, showcasing MERL’s advances at the intersection of acoustics and deep learning.

SANE 2025 was co-organized by Jonathan Le Roux (MERL), Quan Wang (Google DeepMind), and John R. Hershey (Google DeepMind). SANE remained a free event thanks to generous sponsorship by Google, MERL, Apple, Bose, and Carnegie Mellon University.

Slides and videos of the talks are available from the SANE workshop website and via a YouTube playlist.


See All News & Events for Speech & Audio -

-

Research Highlights

-

Internships

-

CI0197: Internship - Embodied AI & Humanoid Robotics

-

SA0188: Internship - Audio separation, generation, and analysis

-

CV0267: Internship - Audio-Visual Learning for Spatial Audio Processing

See All Internships for Speech & Audio -

-

Openings

See All Openings at MERL -

Recent Publications

- Jeon, C.-B., Wichern, G., Germain, F.G., Le Roux, J., "Embracing Cacophony: Explaining and Improving Random Mixing in Music Source Separation", IEEE Open Journal of Signal Processing, DOI: 10.1109/OJSP.2025.3633567, Vol. 6, pp. 1179-1192, January 2026. TR2026-012

@article{Jeon2026jan,
  author = {Jeon, Chang-Bin and Wichern, Gordon and Germain, François G and {Le Roux}, Jonathan},
  title = {{Embracing Cacophony: Explaining and Improving Random Mixing in Music Source Separation}},
  journal = {IEEE Open Journal of Signal Processing},
  year = 2026,
  volume = 6,
  pages = {1179--1192},
  month = jan,
  doi = {10.1109/OJSP.2025.3633567},
  url = {https://www.merl.com/publications/TR2026-012}
}

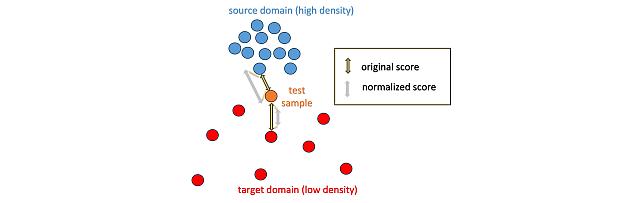

- Wilkinghoff, K., Yang, H., Ebbers, J., Germain, F.G., Wichern, G., Le Roux, J., "Local Density-Based Anomaly Score Normalization for Domain Generalization", IEEE Transactions on Audio, Speech and Language Processing, January 2026. TR2026-010

@article{Wilkinghoff2026jan,
  author = {Wilkinghoff, Kevin and Yang, Haici and Ebbers, Janek and Germain, François G and Wichern, Gordon and {Le Roux}, Jonathan},
  title = {{Local Density-Based Anomaly Score Normalization for Domain Generalization}},
  journal = {IEEE Transactions on Audio, Speech and Language Processing},
  year = 2026,
  month = jan,
  url = {https://www.merl.com/publications/TR2026-010}
}

- Cornell, S., Boeddeker, C., Park, T., Huang, H., Raj, D., Wiesner, M., Masuyama, Y., Chang, X., Wang, Z.-Q., Squartini, S., Garcia, P., Watanabe, S., "Recent Trends in Distant Conversational Speech Recognition: A Review of CHiME-7 and 8 DASR Challenges", Computer Speech & Language, DOI: 10.1016/j.csl.2025.101901, Vol. 97, pp. 101901, December 2025. TR2026-008

@article{Cornell2025dec,
  author = {Cornell, Samuele and Boeddeker, Christoph and Park, Taejin and Huang, He and Raj, Desh and Wiesner, Matthew and Masuyama, Yoshiki and Chang, Xuankai and Wang, Zhong-Qiu and Squartini, Stefano and Garcia, Paola and Watanabe, Shinji},
  title = {{Recent Trends in Distant Conversational Speech Recognition: A Review of CHiME-7 and 8 DASR Challenges}},
  journal = {Computer Speech \& Language},
  year = 2025,
  volume = 97,
  pages = 101901,
  month = dec,
  doi = {10.1016/j.csl.2025.101901},
  url = {https://www.merl.com/publications/TR2026-008}
}

- Masuyama, Y., Wichern, G., Germain, F.G., Ick, C., Le Roux, J., "SuDaField: Subject- and Dataset-Aware Neural Field for HRTF Modeling", IEEE Open Journal of Signal Processing, DOI: 10.1109/OJSP.2025.3627073, Vol. 6, pp. 1169-1178, December 2025. TR2026-009

@article{Masuyama2025dec2,
  author = {Masuyama, Yoshiki and Wichern, Gordon and Germain, François G and Ick, Christopher and {Le Roux}, Jonathan},
  title = {{SuDaField: Subject- and Dataset-Aware Neural Field for HRTF Modeling}},
  journal = {IEEE Open Journal of Signal Processing},
  year = 2025,
  volume = 6,
  pages = {1169--1178},
  month = dec,
  doi = {10.1109/OJSP.2025.3627073},
  url = {https://www.merl.com/publications/TR2026-009}
}

- Masuyama, Y., Wichern, G., Germain, F.G., Ick, C., Le Roux, J., "RANF: Neural Field-Based HRTF Spatial Upsampling with Retrieval Augmentation and Parameter Efficient Fine-Tuning", IEEE Open Journal of Signal Processing, DOI: 10.1109/OJSP.2025.3640517, Vol. 7, pp. 32-41, December 2025. TR2026-007

@article{Masuyama2025dec,
  author = {Masuyama, Yoshiki and Wichern, Gordon and Germain, François G and Ick, Christopher and {Le Roux}, Jonathan},
  title = {{RANF: Neural Field-Based HRTF Spatial Upsampling with Retrieval Augmentation and Parameter Efficient Fine-Tuning}},
  journal = {IEEE Open Journal of Signal Processing},
  year = 2025,
  volume = 7,
  pages = {32--41},
  month = dec,
  doi = {10.1109/OJSP.2025.3640517},
  url = {https://www.merl.com/publications/TR2026-007}
}

- Hori, C., Masuyama, Y., Jain, S., Corcodel, R., Jha, D.K., Romeres, D., Le Roux, J., "Robot Confirmation Generation and Action Planning Using Long-context Q-Former Integrated with Multimodal LLM", IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), December 2025. TR2025-167

@inproceedings{Hori2025dec,
  author = {Hori, Chiori and Masuyama, Yoshiki and Jain, Siddarth and Corcodel, Radu and Jha, Devesh K. and Romeres, Diego and {Le Roux}, Jonathan},
  title = {{Robot Confirmation Generation and Action Planning Using Long-context Q-Former Integrated with Multimodal LLM}},
  booktitle = {IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU)},
  year = 2025,
  month = dec,
  url = {https://www.merl.com/publications/TR2025-167}
}

- Masuyama, Y., "Neural Fields for Spatial Audio Modeling," Tech. Rep. TR2025-171, Speech and Audio in the Northeast (SANE), November 2025.

@techreport{Masuyama2025nov,
  author = {Masuyama, Yoshiki},
  title = {{Neural Fields for Spatial Audio Modeling}},
  institution = {Speech and Audio in the Northeast (SANE)},
  year = 2025,
  month = nov,
  url = {https://www.merl.com/publications/TR2025-171}
}

- Wilkinghoff, K., Fujimura, T., Imoto, K., Le Roux, J., Tan, Z.-H., Toda, T., "Handling Domain Shifts for Anomalous Sound Detection: A Review of DCASE-Related Work", Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), DOI: 10.5281/zenodo.17251589, October 2025, pp. 20-24. TR2025-157

@inproceedings{Wilkinghoff2025oct,
  author = {Wilkinghoff, Kevin and Fujimura, Takuya and Imoto, Keisuke and {Le Roux}, Jonathan and Tan, Zheng-Hua and Toda, Tomoki},
  title = {{Handling Domain Shifts for Anomalous Sound Detection: A Review of DCASE-Related Work}},
  booktitle = {Workshop on Detection and Classification of Acoustic Scenes and Events (DCASE)},
  year = 2025,
  pages = {20--24},
  month = oct,
  doi = {10.5281/zenodo.17251589},
  isbn = {978-84-09-77652-8},
  url = {https://www.merl.com/publications/TR2025-157}
}


Videos

-

Software & Data Downloads

-

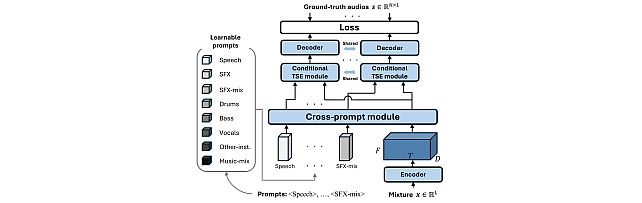

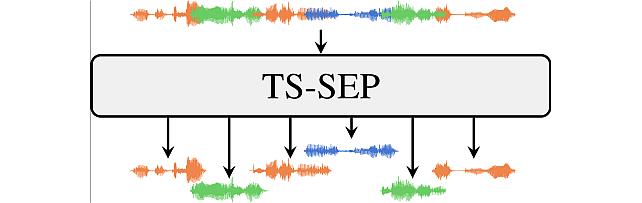

Task-Aware Unified Source Separation -

Local Density-Based Anomaly Score Normalization for Domain Generalization -

Retrieval-Augmented Neural Field for HRTF Upsampling and Personalization -

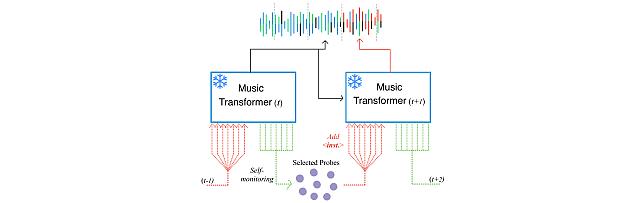

Self-Monitored Inference-Time INtervention for Generative Music Transformers -

Transformer-based model with LOcal-modeling by COnvolution -

Sound Event Bounding Boxes -

Enhanced Reverberation as Supervision -

Neural IIR Filter Field for HRTF Upsampling and Personalization -

Target-Speaker SEParation -

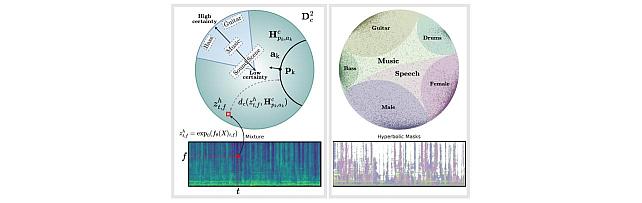

Hyperbolic Audio Source Separation -

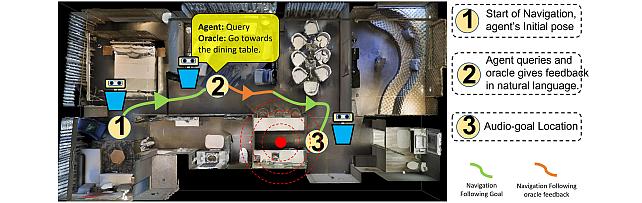

Audio-Visual-Language Embodied Navigation in 3D Environments -

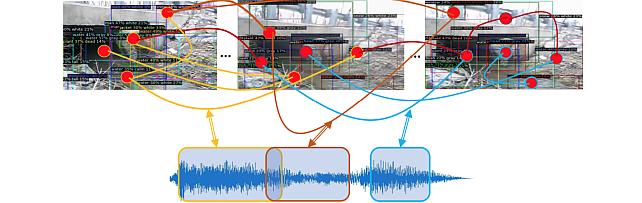

Audio Visual Scene-Graph Segmentor -

Hierarchical Musical Instrument Separation -

Non-negative Dynamical System model -

Subject- and Dataset-Aware Neural Field for HRTF Modeling

-