Robust Machine Learning

Improving the natural-robust accuracy tradeoff for adversary-resilient deep learning.

MERL Researchers: Matthew Brand, Anoop Cherian, Toshiaki Koike-Akino, Jonathan Le Roux, Jing Liu, Kieran Parsons, Kuan-Chuan Peng, Ye Wang, K.J. Kim.

University Consultants: Prof. Shuchin Aeron (Tufts University), Prof. Pierre Moulin (University of Illinois Urbana-Champaign)

Interns: Tejas Jayashankar (University of Illinois Urbana-Champaign), Bryan Liu (University of New South Wales), Adnan Rakin (Arizona State University), Vasu Singla (University of Maryland), Niklas Smedemark-Margulies (Northeastern University), Xi Yu (University of Florida)

Overview

Deep learning is widely applied, yet it is highly vulnerable to adversarial examples, i.e., virtually imperceptible input perturbations that fool deep neural networks (DNNs). We aim to develop robust machine learning technology: practical defenses that make deep learning-based systems resilient to adversarial examples, grounded in a better theoretical understanding of the fragility of conventional DNNs.
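To make "imperceptible perturbation" concrete, here is a minimal sketch of the fast gradient sign method (FGSM), a standard attack that is not specific to this project, applied to a toy logistic-regression model. The weights, input, budget `eps`, and function names are hypothetical, chosen only for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fgsm_logistic(x, y, w, b, eps):
    """One FGSM step against a logistic-regression model p(y=1|x) = sigmoid(w.x + b).

    x: input vector, y: true label in {0, 1}, eps: L-infinity perturbation budget.
    """
    p = sigmoid(w @ x + b)              # predicted probability of class 1
    grad_x = (p - y) * w                # gradient of binary cross-entropy w.r.t. x
    return x + eps * np.sign(grad_x)    # move each coordinate by eps to increase the loss


# Hypothetical toy model and input, for illustration only.
w = np.array([1.0, -2.0, 0.5])
b = 0.1
x = np.array([0.3, 0.1, 0.2])
x_adv = fgsm_logistic(x, 1, w, b, eps=0.1)

print("clean prediction:", int(sigmoid(w @ x + b) > 0.5))
print("adversarial prediction:", int(sigmoid(w @ x_adv + b) > 0.5))
print("max per-coordinate change:", np.abs(x_adv - x).max())
```

Each coordinate moves by only 0.1, yet the logit shifts by `eps` times the L1 norm of `w` (0.35 here), which is enough to cross the decision boundary. In high-dimensional inputs this L1 norm grows with the input size, which is one common explanation for why tiny perturbations can suffice.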

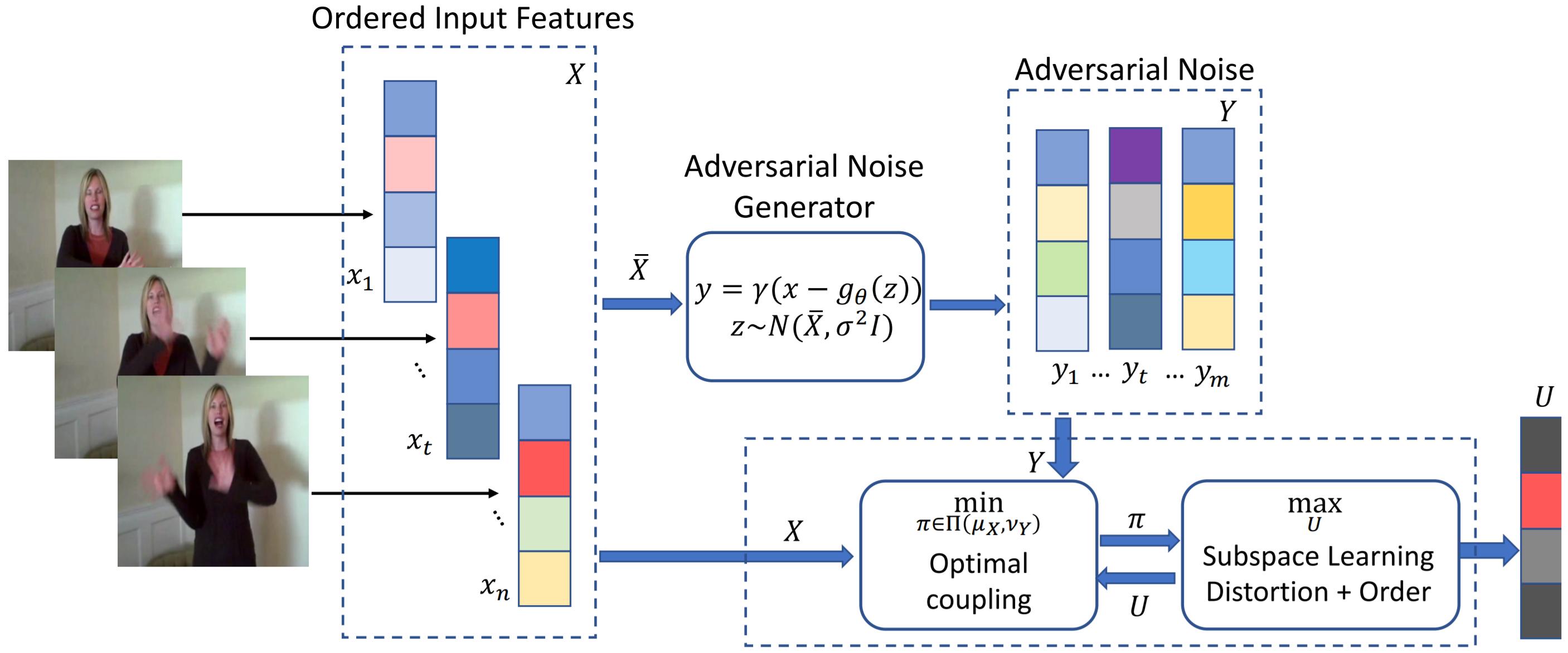

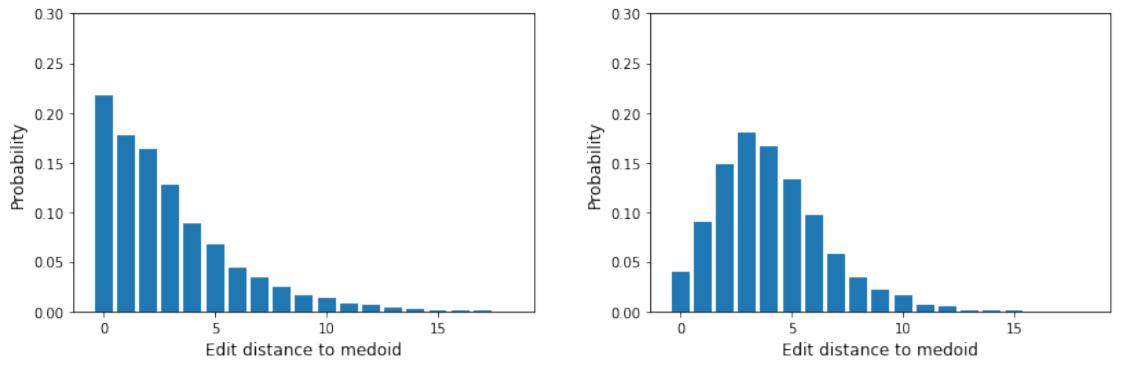

We study the problem of learning compact representations for sequential data. To maximize extraction of implicit spatiotemporal cues, we set the problem within the context of contrastive representation learning and to that end propose a novel objective that includes maximizing the optimal transport distance of the data from an adversarial data distribution. To generate the adversarial distribution, we propose a novel framework connecting Wasserstein GANs with a classifier, allowing a principled mechanism for producing good negative distributions for contrastive learning, which is currently a challenging problem. Our results demonstrate competitive performance on the task of human action recognition in video sequences.
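The full ACOT method couples a Wasserstein GAN with a classifier to produce the adversarial distribution; the sketch below only illustrates the quantity being maximized, an optimal transport distance between data embeddings and a batch of negative embeddings. It assumes an entropy-regularized (Sinkhorn) approximation with a squared-Euclidean cost, computed in the log domain for numerical stability; these choices, the regularization `reg`, and all function names are assumptions rather than details from the paper.

```python
import numpy as np


def _logsumexp(A, axis):
    # Numerically stable log(sum(exp(A))) along one axis.
    m = A.max(axis=axis, keepdims=True)
    return (m + np.log(np.exp(A - m).sum(axis=axis, keepdims=True))).squeeze(axis)


def sinkhorn_ot(X, Y, reg=0.1, n_iters=300):
    """Entropy-regularized OT cost between uniform empirical distributions on X and Y."""
    n, m = len(X), len(Y)
    C = ((X[:, None, :] - Y[None, :, :]) ** 2).sum(-1)   # squared-Euclidean cost matrix
    log_a, log_b = np.full(n, -np.log(n)), np.full(m, -np.log(m))
    f, g = np.zeros(n), np.zeros(m)                       # dual potentials
    for _ in range(n_iters):                              # log-domain Sinkhorn updates
        f = reg * (log_a - _logsumexp((g[None, :] - C) / reg, axis=1))
        g = reg * (log_b - _logsumexp((f[:, None] - C) / reg, axis=0))
    P = np.exp((f[:, None] + g[None, :] - C) / reg)       # approximate transport plan
    return float((P * C).sum())


def contrastive_ot_loss(z_data, z_negative, reg=0.1):
    # Hypothetical loss: minimizing it pushes data embeddings away from the negatives.
    return -sinkhorn_ot(z_data, z_negative, reg)


rng = np.random.default_rng(0)
z = rng.normal(size=(32, 2))                 # stand-in for learned embeddings
near_neg, far_neg = z + 0.1, z + 3.0         # stand-ins for "adversarial" negatives
print(contrastive_ot_loss(z, near_neg), contrastive_ot_loss(z, far_neg))
```

Negatives that sit close to the data yield a small transport cost and thus a high loss, so minimizing this loss rewards representations that separate data from hard negatives.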

Various adversarial audio attacks have recently been developed to fool automatic speech recognition (ASR) systems. We propose a defense against such attacks based on the uncertainty introduced by dropout in neural networks. We show that our defense is able to detect attacks created through optimized perturbations and frequency masking on a state-of-the-art end-to-end ASR system. Furthermore, the defense can be made robust against attacks that are immune to noise reduction. We test our defense on Mozilla's CommonVoice dataset, the UrbanSound dataset, and an excerpt of the LibriSpeech dataset, showing that it achieves high detection accuracy in a wide range of scenarios.
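A common way to obtain dropout uncertainty is Monte Carlo dropout: keep dropout active at inference, run several stochastic forward passes, and measure how much the outputs disagree. The sketch below does this for a hypothetical two-layer NumPy network rather than an end-to-end ASR model. The variance statistic, the `threshold`, and all function names are assumptions; the paper's detector may use different uncertainty features.

```python
import numpy as np


def mc_dropout_predict(x, W1, W2, p_drop, n_samples, rng):
    """Run n_samples stochastic forward passes with dropout kept on at inference."""
    probs = []
    for _ in range(n_samples):
        h = np.maximum(0.0, W1 @ x)                  # ReLU hidden layer
        mask = rng.random(h.shape) >= p_drop         # keep each unit with prob 1 - p_drop
        h = h * mask / (1.0 - p_drop)                # inverted-dropout scaling
        logits = W2 @ h
        e = np.exp(logits - logits.max())
        probs.append(e / e.sum())                    # softmax over classes
    return np.stack(probs)                           # shape (n_samples, n_classes)


def dropout_uncertainty(probs):
    # Per-class variance across dropout samples, averaged over classes.
    return float(probs.var(axis=0).mean())


def is_suspicious(x, W1, W2, threshold, p_drop=0.3, n_samples=50, seed=0):
    # Hypothetical detector: flag inputs whose dropout uncertainty exceeds a tuned threshold.
    rng = np.random.default_rng(seed)
    probs = mc_dropout_predict(x, W1, W2, p_drop, n_samples, rng)
    return dropout_uncertainty(probs) > threshold


rng = np.random.default_rng(0)
W1 = rng.normal(size=(16, 8))     # stand-ins for trained weights
W2 = rng.normal(size=(4, 16))
x = rng.normal(size=8)
probs = mc_dropout_predict(x, W1, W2, p_drop=0.3, n_samples=50, rng=rng)
print(probs.shape, dropout_uncertainty(probs))
```

In practice the threshold would be calibrated on clean and attacked examples; with `p_drop=0` every pass is identical and the uncertainty collapses to zero.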

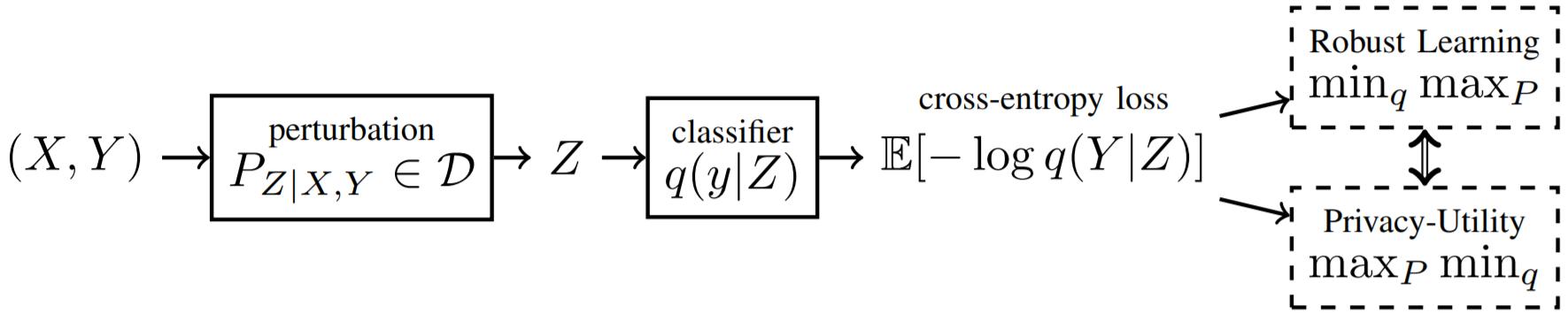

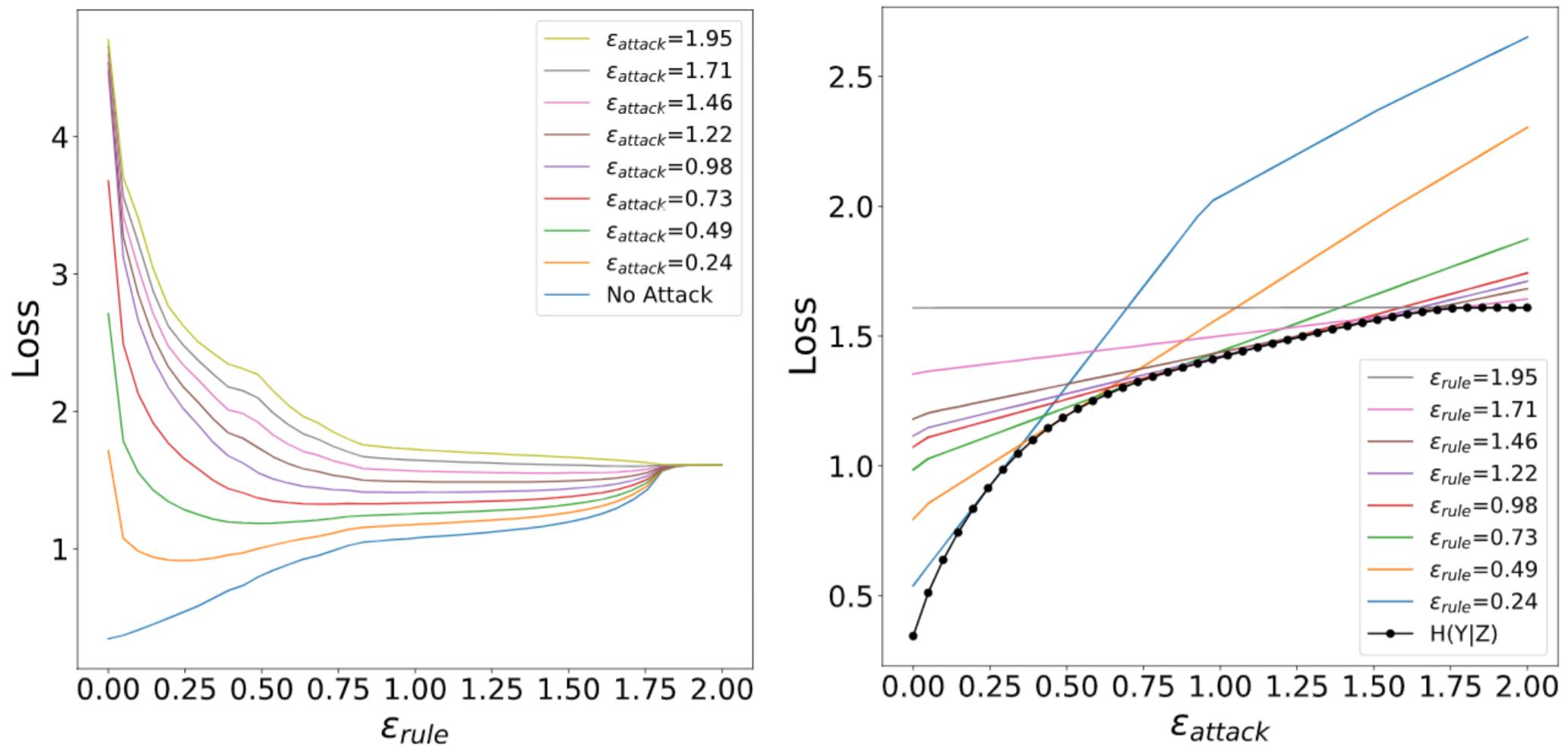

Robust machine learning formulations have emerged to address the prevalent vulnerability of DNNs to adversarial examples. Our work draws a connection between optimal robust learning and the privacy-utility tradeoff problem, a generalization of the rate-distortion problem. The saddle point of the game between a robust classifier and an adversarial perturbation can be found by solving a maximum conditional entropy problem. This information-theoretic perspective sheds light on the fundamental tradeoff between robustness and clean data performance, which ultimately arises from the geometric structure of the underlying data distribution and the perturbation constraints.
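Assuming log-loss, for any fixed perturbation channel P(Z|X) the best classifier is the posterior P(Y|Z), whose expected loss is exactly H(Y|Z); the adversary therefore seeks the allowed channel that maximizes H(Y|Z). The sketch below brute-forces that maximum over a hypothetical, finite list of channels for a tiny discrete problem. It illustrates the maximum-conditional-entropy side of the saddle point, not the paper's solution method, and brute force is only feasible for toy alphabets.

```python
import numpy as np


def joint_zy(p_xy, channel):
    # P(Z, Y) = sum_x P(Z | X = x) P(X = x, Y); channel[x, z] = P(Z = z | X = x).
    return channel.T @ p_xy


def conditional_entropy(p_zy):
    """H(Y | Z) in nats from a joint table P(Z, Y)."""
    p_z = p_zy.sum(axis=1, keepdims=True)
    mask = p_zy > 0
    ratio = np.divide(p_zy, p_z, out=np.ones_like(p_zy), where=mask)
    return float(-(p_zy[mask] * np.log(ratio[mask])).sum())


def posterior_classifier(p_zy):
    # Bayes posterior q(y | z); falls back to uniform for z values with zero probability.
    p_z = p_zy.sum(axis=1, keepdims=True)
    uniform = np.full_like(p_zy, 1.0 / p_zy.shape[1])
    return np.divide(p_zy, p_z, out=uniform, where=p_z > 0)


def expected_log_loss(p_zy, q):
    mask = p_zy > 0
    return float(-(p_zy[mask] * np.log(q[mask])).sum())


def robust_game(p_xy, channels):
    """Adversary picks the channel maximizing H(Y|Z); the classifier answers with P(Y|Z)."""
    values = [conditional_entropy(joint_zy(p_xy, c)) for c in channels]
    k = int(np.argmax(values))
    q = posterior_classifier(joint_zy(p_xy, channels[k]))
    return k, q, values[k]


# Hypothetical toy problem: X in {0, 1, 2}, Y in {0, 1}; each x may move by at most one step.
p_xy = np.array([[0.3, 0.0],
                 [0.1, 0.2],
                 [0.0, 0.4]])
channels = [
    np.eye(3),                         # no perturbation
    np.array([[0.0, 1.0, 0.0],         # move every x to the ambiguous point z = 1
              [0.0, 1.0, 0.0],
              [0.0, 1.0, 0.0]]),
]
k, q, value = robust_game(p_xy, channels)
print(k, value, expected_log_loss(joint_zy(p_xy, channels[k]), q))
```

The collapsing channel wins because it erases all information about Y, so H(Y|Z) equals H(Y), and the posterior classifier's log-loss matches that value exactly.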

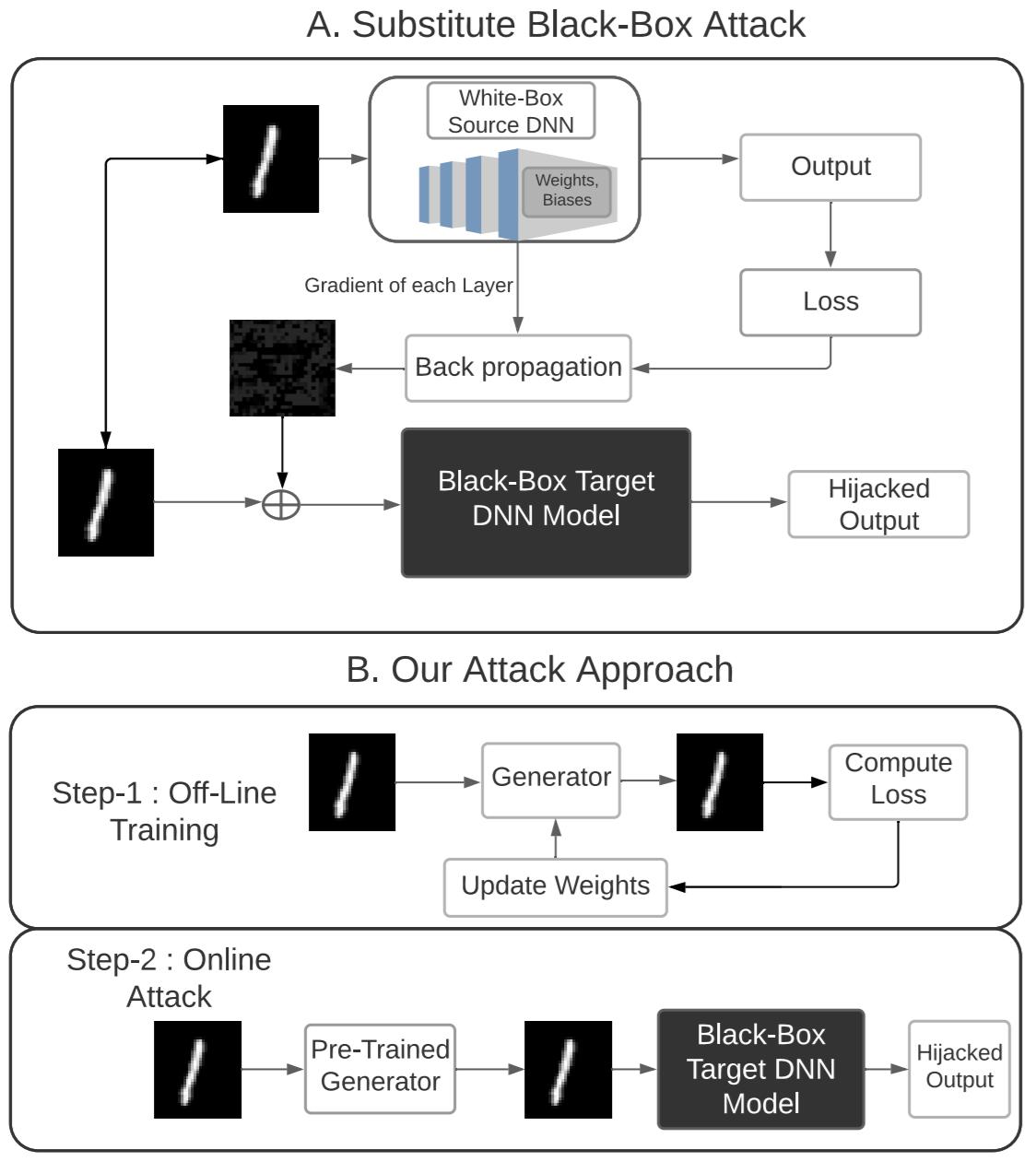

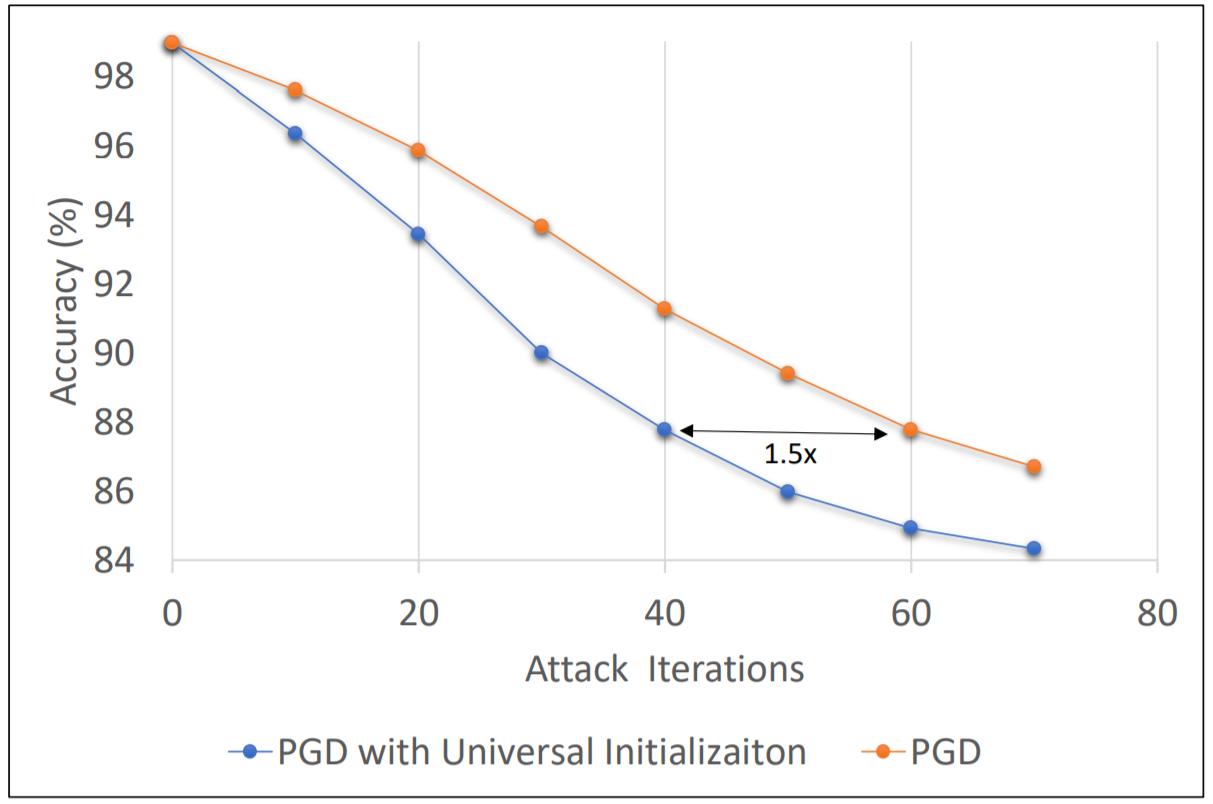

Adversarial examples expose the severe vulnerability of neural network models. However, most existing attacks require some form of target model information (e.g., weights, model queries, or architecture) to be effective. We leverage the information-theoretic connections between robust learning and generalized rate-distortion theory to formulate a universal adversarial example (UAE) generation algorithm. Our algorithm trains an offline adversarial generator to minimize the mutual information between the label and the perturbed data. At inference, our UAE method efficiently generates effective adversarial examples without high computational cost. These adversarial examples in turn enable universal defenses through adversarial training. Our experiments show promising gains in the training efficiency of conventional adversarial training.
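The UAE generator is trained to minimize the mutual information I(Y; Z) between labels Y and perturbed data Z. A trained generator is beyond a short sketch, so the toy below instead uses a hypothetical hand-crafted, budget-limited perturbation (`shrink_toward_boundary`) on 1-D integer inputs and measures a plug-in estimate of I(Y; Z) before and after, showing the quantity such a generator would drive down.

```python
import numpy as np
from collections import Counter


def plugin_mutual_information(y, z):
    """Plug-in estimate of I(Y; Z) in nats from paired discrete samples."""
    n = len(y)
    joint = Counter(zip(y, z))
    count_y, count_z = Counter(y), Counter(z)
    return float(sum((c / n) * np.log(c * n / (count_y[a] * count_z[b]))
                     for (a, b), c in joint.items()))


def shrink_toward_boundary(x, eps):
    # Hypothetical perturbation: move each point up to eps toward the decision
    # boundary at 0 (never past it), so |z - x| <= eps.
    return x - np.sign(x) * np.minimum(np.abs(x), eps)


x = np.arange(-5, 6)           # toy 1-D integer inputs
y = (x >= 0).astype(int)       # labels from a threshold at 0
z = shrink_toward_boundary(x, eps=1)

print(plugin_mutual_information(y, x), plugin_mutual_information(y, z))
```

On clean data I(Y; X) equals H(Y), because each label is a deterministic function of x. The perturbation merges x = -1, 0, 1 into z = 0, so Z no longer determines Y and the mutual information drops.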

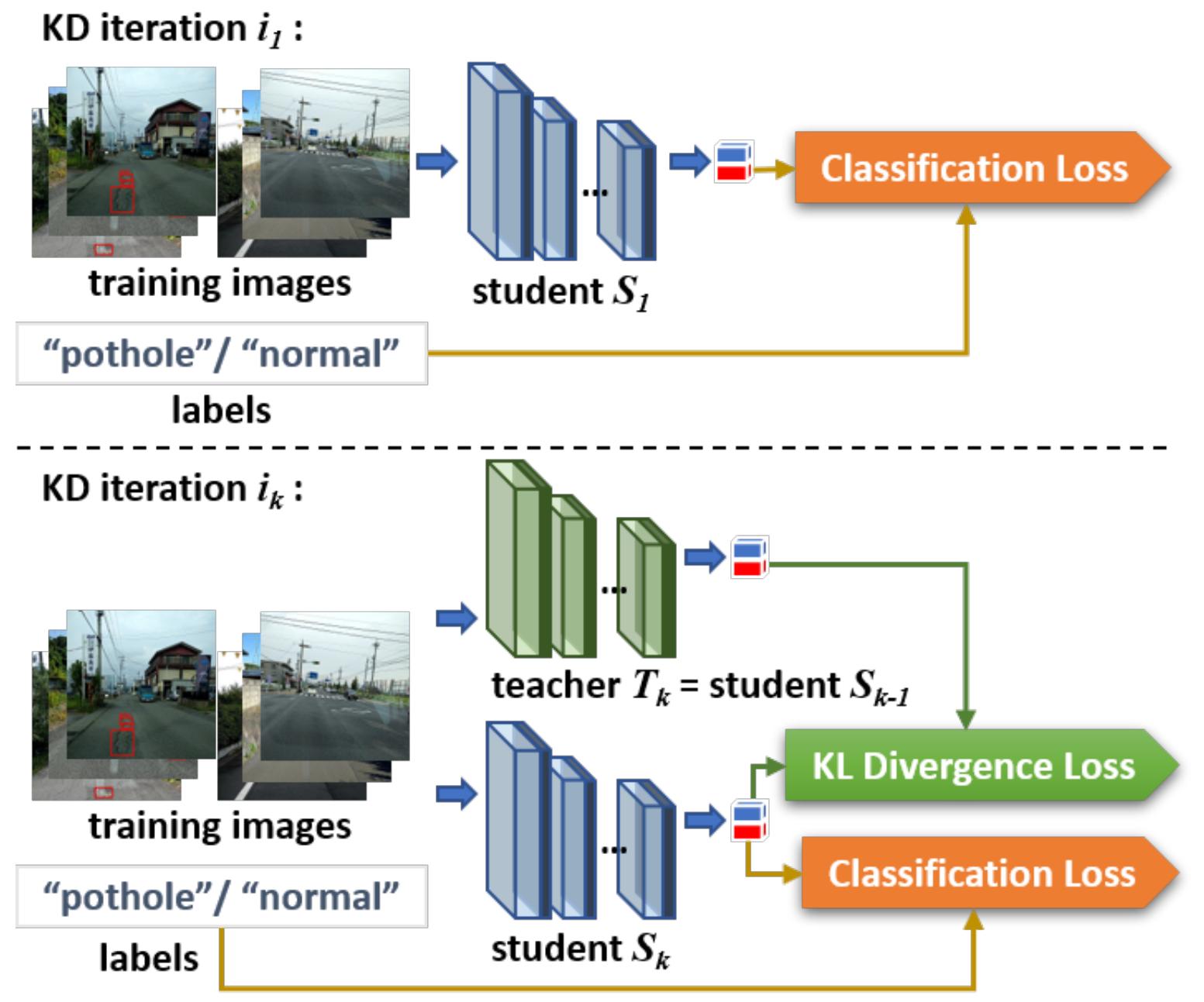

We developed iterative self-knowledge distillation (ISKD) to train lightweight classifiers when computational power and training time are limited. ISKD outperforms the state-of-the-art self-knowledge distillation method on three pothole classification datasets across four lightweight network architectures, demonstrating the advantage of performing self-knowledge distillation iteratively rather than just once. We further demonstrate the efficacy of ISKD on six additional datasets covering generic classification, fine-grained classification, and medical imaging, suggesting that ISKD can serve as a general-purpose performance booster without requiring a given teacher model or extra trainable parameters.
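A minimal sketch of the iterative idea, assuming a softmax-regression "network" in NumPy: each round trains a fresh model on a blend of one-hot labels and the previous round's temperature-softened predictions, so the model teaches its own successor without an external teacher. The blending rule, `alpha`, `T`, restarting from scratch each round, and all function names are assumptions; the paper's exact loss and schedule may differ.

```python
import numpy as np


def softmax(logits, T=1.0):
    # Row-wise softmax with temperature T.
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def distillation_targets(labels, teacher_logits, n_classes, alpha, T):
    """Blend one-hot labels with the teacher's temperature-softened predictions."""
    onehot = np.eye(n_classes)[labels]
    if teacher_logits is None:          # first round: no teacher yet
        return onehot
    return alpha * onehot + (1.0 - alpha) * softmax(teacher_logits, T)


def train_softmax_regression(X, targets, lr=0.1, epochs=300):
    # Gradient descent on cross-entropy with (possibly soft) targets.
    W = np.zeros((X.shape[1], targets.shape[1]))
    for _ in range(epochs):
        W -= lr * X.T @ (softmax(X @ W) - targets) / len(X)
    return W


def iterative_self_distillation(X, y, n_classes, rounds=3, alpha=0.7, T=2.0):
    """Each round trains a fresh model; the previous round's model is its teacher."""
    teacher_logits, models = None, []
    for _ in range(rounds):
        targets = distillation_targets(y, teacher_logits, n_classes, alpha, T)
        W = train_softmax_regression(X, targets)
        models.append(W)
        teacher_logits = X @ W          # this model teaches the next round
    return models


# Hypothetical toy data: three Gaussian clusters plus a bias feature.
rng = np.random.default_rng(0)
centers = np.array([[3.0, 0.0], [-3.0, 0.0], [0.0, 3.0]])
y = np.repeat(np.arange(3), 50)
X = np.hstack([centers[y] + rng.normal(size=(150, 2)), np.ones((150, 1))])
models = iterative_self_distillation(X, y, n_classes=3)
print([float((np.argmax(X @ W, axis=1) == y).mean()) for W in models])
```

Setting `rounds=2` corresponds to distilling once; larger values repeat the process, which is the aspect the paragraph above credits for ISKD's gains.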

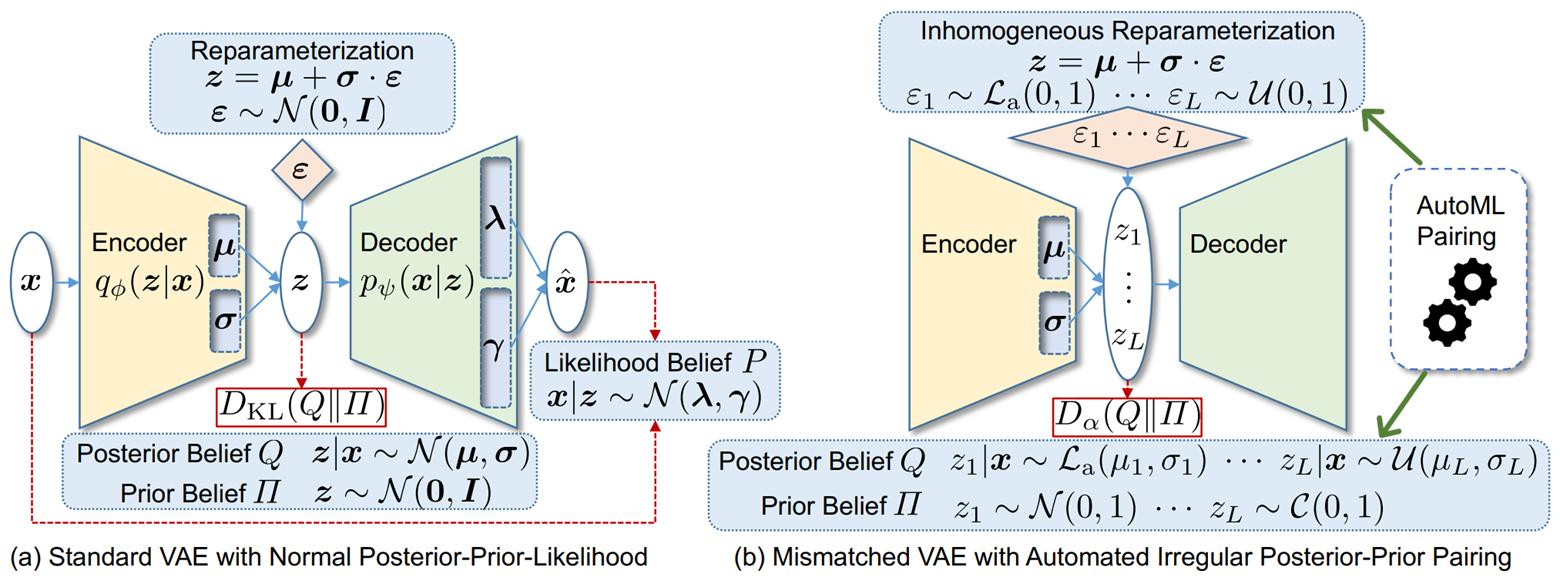

The variational autoencoder (VAE) has been used in a myriad of applications, e.g., dimensionality reduction and generative modeling. We investigated a mismatched VAE, which generalizes the choice of posterior and prior belief distributions. Because the number of potential combinations grows rapidly, we propose a novel framework called AutoVAE, which searches for better pairings of posterior and prior beliefs using automated machine learning techniques for hyperparameter optimization. We demonstrate that the proposed irregular pairing offers potential gains in the variational Rényi bound.
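In a mismatched VAE the posterior and prior belong to different families, so the divergence term generally has no closed form. A minimal sketch, assuming a 1-D Gaussian posterior and three hypothetical candidate priors: estimate the Rényi divergence D_alpha(q || p) = (1 / (alpha - 1)) log E_q[(q/p)^(alpha - 1)] by Monte Carlo and rank the priors. AutoVAE's actual search scores full posterior-prior pairings, including the reconstruction term of the variational Rényi bound; the encoder output, priors, and `alpha` below are assumptions.

```python
import numpy as np


def log_normal(x, mu=0.0, sigma=1.0):
    return -0.5 * ((x - mu) / sigma) ** 2 - np.log(sigma * np.sqrt(2 * np.pi))


def log_laplace(x, mu=0.0, b=1.0):
    return -np.abs(x - mu) / b - np.log(2 * b)


def log_cauchy(x, x0=0.0, gamma=1.0):
    return -np.log(np.pi * gamma * (1 + ((x - x0) / gamma) ** 2))


def renyi_divergence_mc(samples, log_q, log_p, alpha):
    """Monte Carlo estimate of D_alpha(q || p) from samples drawn from q (alpha != 1)."""
    w = (alpha - 1.0) * (log_q(samples) - log_p(samples))
    m = w.max()
    log_mean = m + np.log(np.mean(np.exp(w - m)))   # stable log E_q[(q/p)^(alpha-1)]
    return float(log_mean / (alpha - 1.0))


mu, sigma = 0.5, 0.8                      # hypothetical encoder output for one input


def log_q(x):
    return log_normal(x, mu, sigma)       # Gaussian posterior


rng = np.random.default_rng(0)
z = rng.normal(mu, sigma, size=100_000)   # samples from the posterior q
priors = {"normal": log_normal, "laplace": log_laplace, "cauchy": log_cauchy}
scores = {name: renyi_divergence_mc(z, log_q, lp, alpha=0.5) for name, lp in priors.items()}
best = min(scores, key=scores.get)
print(scores, best)
```

For two Gaussians with equal variance the estimate can be checked against the closed form alpha * (mu1 - mu2)^2 / (2 sigma^2).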

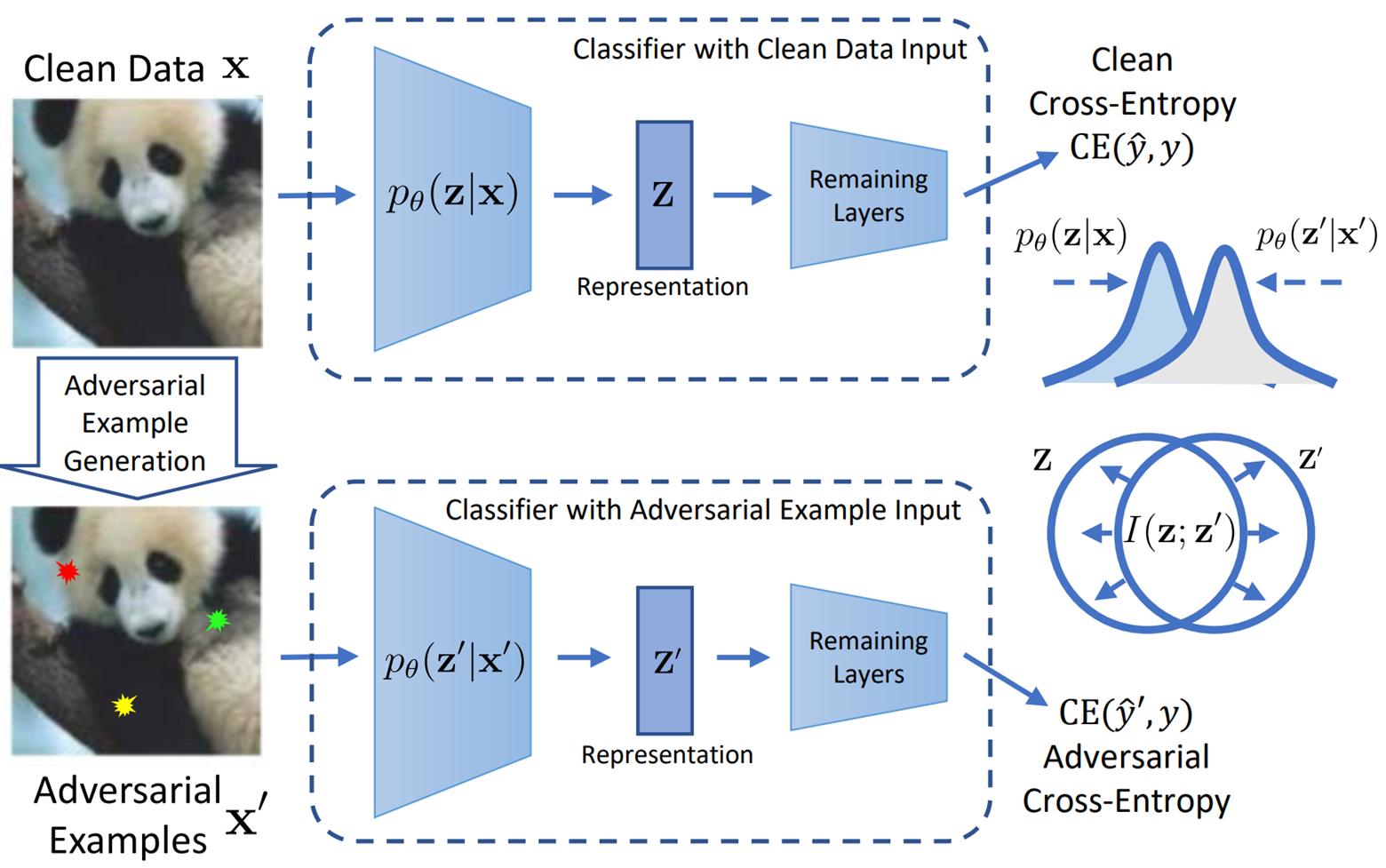

We developed a novel learning method that improves adversarial robustness while retaining natural accuracy. Motivated by the multi-view information bottleneck framework, our technique learns representations that capture the information shared between clean samples and their corresponding adversarial samples, while discarding view-specific information. We show that this approach leads to a novel multi-objective loss function, and we provide mathematical motivation for how its components improve the robust vs. natural accuracy tradeoff. We demonstrate an improved tradeoff compared to current state-of-the-art methods, with extensive evaluation on various benchmark image datasets and architectures. Ablation studies indicate that learning shared representations is key to improving performance.
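A minimal sketch of a multi-objective loss in this spirit, assuming NumPy logits for a clean batch and its adversarial counterpart: classify both views, and penalize view-specific information through a symmetrized KL divergence between the two views. For brevity the penalty is applied to predictive distributions here, whereas the multi-view information bottleneck framework applies it to representation distributions; the weight `beta` and all function names are hypothetical, and this is not the paper's exact loss.

```python
import numpy as np


def log_softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def cross_entropy(logits, labels):
    return float(-log_softmax(logits)[np.arange(len(labels)), labels].mean())


def symmetric_kl(logits_a, logits_b):
    """Mean symmetrized KL divergence between two batches of categorical predictions."""
    la, lb = log_softmax(logits_a), log_softmax(logits_b)
    pa, pb = np.exp(la), np.exp(lb)
    return float(0.5 * ((pa * (la - lb)).sum(1) + (pb * (lb - la)).sum(1)).mean())


def shared_information_loss(clean_logits, adv_logits, labels, beta=1.0):
    # Classify both views, and penalize disagreement between them so that
    # only information shared by the clean and adversarial views is rewarded.
    return (cross_entropy(clean_logits, labels)
            + cross_entropy(adv_logits, labels)
            + beta * symmetric_kl(clean_logits, adv_logits))


rng = np.random.default_rng(0)
labels = rng.integers(0, 3, size=8)
clean = rng.normal(size=(8, 3))
adv = clean + 0.5 * rng.normal(size=(8, 3))    # stand-in for logits on adversarial inputs
print(shared_information_loss(clean, adv, labels, beta=1.0))
```

When the two views agree exactly, the consistency term vanishes and the loss reduces to the two cross-entropy terms; `beta` trades off agreement against per-view accuracy.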


Software & Data Downloads

Adversarially-Contrastive Optimal Transport (ACOT): code for learning compact representations of sequential data that capture their implicit spatiotemporal cues.

MERL Publications

- Cherian, A., Aeron, S., "Representation Learning via Adversarially-Contrastive Optimal Transport", International Conference on Machine Learning (ICML), Daumé, H. and Singh, A., Eds., July 2020, pp. 10675-10685 (TR2020-093).

```bibtex
@inproceedings{Cherian2020jul,
  author = {Cherian, Anoop and Aeron, Shuchin},
  title = {{Representation Learning via Adversarially-Contrastive Optimal Transport}},
  booktitle = {International Conference on Machine Learning (ICML)},
  year = 2020,
  editor = {Daumé, H. and Singh, A.},
  pages = {10675--10685},
  month = jul,
  url = {https://www.merl.com/publications/TR2020-093}
}
```

- Jayashankar, T., Le Roux, J., Moulin, P., "Detecting Audio Attacks on ASR Systems with Dropout Uncertainty", Interspeech, DOI: 10.21437/Interspeech.2020-1846, October 2020, pp. 4671-4675 (TR2020-137).

```bibtex
@inproceedings{Jayashankar2020oct,
  author = {Jayashankar, Tejas and {Le Roux}, Jonathan and Moulin, Pierre},
  title = {{Detecting Audio Attacks on ASR Systems with Dropout Uncertainty}},
  booktitle = {Interspeech},
  year = 2020,
  pages = {4671--4675},
  month = oct,
  doi = {10.21437/Interspeech.2020-1846},
  issn = {1990-9772},
  url = {https://www.merl.com/publications/TR2020-137}
}
```

- Wang, Y., Aeron, S., Rakin, A.S., Koike-Akino, T., Moulin, P., "Robust Machine Learning via Privacy/Rate-Distortion Theory", IEEE International Symposium on Information Theory (ISIT), DOI: 10.1109/ISIT45174.2021.9517751, July 2021 (TR2021-082).

```bibtex
@inproceedings{Wang2021jul,
  author = {Wang, Ye and Aeron, Shuchin and Rakin, Adnan S and Koike-Akino, Toshiaki and Moulin, Pierre},
  title = {{Robust Machine Learning via Privacy/Rate-Distortion Theory}},
  booktitle = {IEEE International Symposium on Information Theory (ISIT)},
  year = 2021,
  month = jul,
  publisher = {IEEE},
  doi = {10.1109/ISIT45174.2021.9517751},
  isbn = {978-1-5386-8210-4},
  url = {https://www.merl.com/publications/TR2021-082}
}
```

- Rakin, A.S., Wang, Y., Aeron, S., Koike-Akino, T., Moulin, P., Parsons, K., "Towards Universal Adversarial Examples and Defenses", IEEE Information Theory Workshop, DOI: 10.1109/ITW48936.2021.9611439, October 2021 (TR2021-125).

```bibtex
@inproceedings{Rakin2021oct,
  author = {Rakin, Adnan S and Wang, Ye and Aeron, Shuchin and Koike-Akino, Toshiaki and Moulin, Pierre and Parsons, Kieran},
  title = {{Towards Universal Adversarial Examples and Defenses}},
  booktitle = {IEEE Information Theory Workshop},
  year = 2021,
  month = oct,
  publisher = {IEEE},
  doi = {10.1109/ITW48936.2021.9611439},
  isbn = {978-1-6654-0312-2},
  url = {https://www.merl.com/publications/TR2021-125}
}
```

- Peng, K.-C., "Iterative Self Knowledge Distillation -- From Pothole Classification To Fine-Grained And COVID Recognition", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Gan, W.-S. and Ma, K. K., Eds., DOI: 10.1109/ICASSP43922.2022.9746470, April 2022, pp. 3139-3143 (TR2022-020).

```bibtex
@inproceedings{Peng2022apr,
  author = {Peng, Kuan-Chuan},
  title = {{Iterative Self Knowledge Distillation --- From Pothole Classification To Fine-Grained And COVID Recognition}},
  booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},
  year = 2022,
  editor = {Gan, W.-S. and Ma, K. K.},
  pages = {3139--3143},
  month = apr,
  publisher = {IEEE},
  doi = {10.1109/ICASSP43922.2022.9746470},
  issn = {1520-6149},
  isbn = {978-1-6654-0541-6},
  url = {https://www.merl.com/publications/TR2022-020}
}
```

- Koike-Akino, T., Wang, Y., "AutoVAE: Mismatched Variational Autoencoder with Irregular Posterior Prior Pairing", IEEE International Symposium on Information Theory (ISIT), DOI: 10.1109/ISIT50566.2022.9834769, July 2022 (TR2022-071).

```bibtex
@inproceedings{Koike-Akino2022jul,
  author = {Koike-Akino, Toshiaki and Wang, Ye},
  title = {{AutoVAE: Mismatched Variational Autoencoder with Irregular Posterior Prior Pairing}},
  booktitle = {IEEE International Symposium on Information Theory (ISIT)},
  year = 2022,
  month = jul,
  publisher = {IEEE},
  doi = {10.1109/ISIT50566.2022.9834769},
  issn = {2157-8117},
  isbn = {978-1-6654-2159-1},
  url = {https://www.merl.com/publications/TR2022-071}
}
```

- Liu, B., Koike-Akino, T., Wang, Y., Kim, K.J., Brand, M., Aeron, S., Parsons, K., "Data Privacy and Protection on Deep Leakage from Gradients by Layer-Wise Pruning", IEEE Information Theory and Applications Workshop (ITA), June 2022 (TR2022-080).

```bibtex
@inproceedings{Liu2022jun,
  author = {Liu, Bryan and Koike-Akino, Toshiaki and Wang, Ye and Kim, Kyeong Jin and Brand, Matthew and Aeron, Shuchin and Parsons, Kieran},
  title = {{Data Privacy and Protection on Deep Leakage from Gradients by Layer-Wise Pruning}},
  booktitle = {IEEE Information Theory and Applications Workshop (ITA)},
  year = 2022,
  month = jun,
  url = {https://www.merl.com/publications/TR2022-080}
}
```

- Yu, X., Smedemark-Margulies, N., Aeron, S., Koike-Akino, T., Moulin, P., Brand, M., Parsons, K., Wang, Y., "Improving Adversarial Robustness by Learning Shared Information", Pattern Recognition, DOI: 10.1016/j.patcog.2022.109054, Vol. 134, pp. 109054, November 2022 (TR2022-141).

```bibtex
@article{Yu2022nov,
  author = {Yu, Xi and Smedemark-Margulies, Niklas and Aeron, Shuchin and Koike-Akino, Toshiaki and Moulin, Pierre and Brand, Matthew and Parsons, Kieran and Wang, Ye},
  title = {{Improving Adversarial Robustness by Learning Shared Information}},
  journal = {Pattern Recognition},
  year = 2022,
  volume = 134,
  pages = 109054,
  month = nov,
  doi = {10.1016/j.patcog.2022.109054},
  issn = {0031-3203},
  url = {https://www.merl.com/publications/TR2022-141}
}
```

- Singla, V., Aeron, S., Koike-Akino, T., Parsons, K., Brand, M., Wang, Y., "Learning with noisy labels using low-dimensional model trajectory", NeurIPS 2022 Workshop on Distribution Shifts (DistShift), December 2022 (TR2022-156).

```bibtex
@inproceedings{Singla2022dec,
  author = {Singla, Vasu and Aeron, Shuchin and Koike-Akino, Toshiaki and Parsons, Kieran and Brand, Matthew and Wang, Ye},
  title = {{Learning with noisy labels using low-dimensional model trajectory}},
  booktitle = {NeurIPS 2022 Workshop on Distribution Shifts: Connecting Methods and Applications},
  year = 2022,
  month = dec,
  publisher = {OpenReview},
  url = {https://www.merl.com/publications/TR2022-156}
}
```