TR2025-029

Retrieval-Augmented Neural Field for HRTF Upsampling and Personalization

- Masuyama, Y., Wichern, G., Germain, F.G., Ick, C., Le Roux, J., "Retrieval-Augmented Neural Field for HRTF Upsampling and Personalization", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), DOI: 10.1109/ICASSP49660.2025.10889481, April 2025.

@inproceedings{Masuyama2025mar,
  author = {Masuyama, Yoshiki and Wichern, Gordon and Germain, François G and Ick, Christopher and {Le Roux}, Jonathan},
  title = {{Retrieval-Augmented Neural Field for HRTF Upsampling and Personalization}},
  booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},
  year = 2025,
  month = apr,
  doi = {10.1109/ICASSP49660.2025.10889481},
  url = {https://www.merl.com/publications/TR2025-029}
}



Abstract:

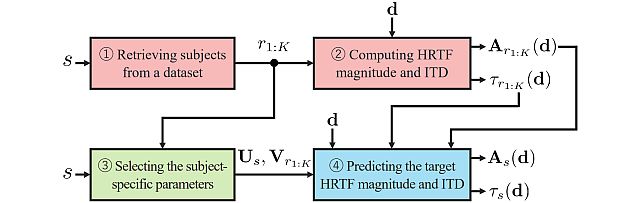

Head-related transfer functions (HRTFs) with dense spatial grids are desired for immersive binaural audio generation, but their recording is time-consuming. Although HRTF spatial upsampling has shown remarkable progress with neural fields, spatial upsampling only from a few measured directions, e.g., 3 or 5 measurements, is still challenging. To tackle this problem, we propose a retrieval-augmented neural field (RANF). RANF retrieves a subject whose HRTFs are close to those of the target subject from a dataset. The HRTF of the retrieved subject at the desired direction is fed into the neural field in addition to the sound source direction itself. Furthermore, we present a neural network that can efficiently handle multiple retrieved subjects, inspired by a multi-channel processing technique called transform-average-concatenate. Our experiments confirm the benefits of RANF on the SONICOM dataset, and it is a key component in the winning solution of Task 2 of the listener acoustic personalization challenge 2024.
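The two ideas in the abstract, retrieving similar subjects and combining several of them with a transform-average-concatenate step, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the use of log-spectral distance as the retrieval criterion, the `tanh` nonlinearities, and every function and weight name (`retrieve_subjects`, `tac_layer`, `w_transform`, `w_average`, `w_concat`) are assumptions made for illustration, and the neural field itself is not modeled.

```python
import numpy as np


def log_spectral_distance(h_a, h_b, eps=1e-8):
    """RMS log-magnitude difference in dB, averaged over directions.

    h_a, h_b: magnitude spectra with shape (n_directions, n_freq_bins).
    """
    diff_db = 20.0 * np.log10((h_a + eps) / (h_b + eps))
    return float(np.mean(np.sqrt(np.mean(diff_db ** 2, axis=-1))))


def retrieve_subjects(target_sparse, dataset, measured_idx, k=1):
    """Return indices of the k dataset subjects closest to the target.

    target_sparse: (n_measured, n_freq_bins) magnitudes of the target subject.
    dataset: (n_subjects, n_directions, n_freq_bins) dense magnitudes.
    measured_idx: positions of the measured directions on the dense grid.
    Assumption: closeness is measured only at the measured directions.
    """
    dists = [
        log_spectral_distance(target_sparse, subject[measured_idx])
        for subject in dataset
    ]
    return np.argsort(dists)[:k]


def tac_layer(feats, w_transform, w_average, w_concat):
    """Transform-average-concatenate over the retrieved subjects.

    feats: (n_retrieved, d_in), one feature vector per retrieved subject.
    The same weights are shared by all subjects, so the layer works for
    any number of retrieved subjects and does not depend on their order.
    """
    # Transform: shared per-subject projection.
    transformed = np.tanh(feats @ w_transform)
    # Average: pool across subjects, then project the pooled vector.
    averaged = np.tanh(transformed.mean(axis=0) @ w_average)
    # Concatenate: append the pooled vector to every subject's features.
    tiled = np.broadcast_to(averaged, transformed.shape)
    combined = np.concatenate([transformed, tiled], axis=-1)
    return np.tanh(combined @ w_concat)
```

In such a sketch, the per-subject features passed to `tac_layer` would be derived from the retrieved subjects' HRTFs at the desired direction, and the result would be combined with the sound source direction inside the neural field; how that is done in RANF is described in the paper, not here.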


Related News & Events

EVENT: SANE 2025 - Speech and Audio in the Northeast

Date: Friday, November 7, 2025

Location: Google, New York, NY

MERL Contacts: Jonathan Le Roux; Yoshiki Masuyama

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief: SANE 2025, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, was held on Friday, November 7, 2025 at Google, in New York, NY.

It was the 12th edition in the SANE series of workshops, which started in 2012 and is typically held every year alternately in Boston and New York. Since the first edition, the audience has grown to about 200 participants and 50 posters each year, and SANE has established itself as a vibrant, must-attend event for the speech and audio community across the northeast and beyond.

SANE 2025 featured invited talks by six leading researchers from the Northeast as well as from the wider community: Dan Ellis (Google DeepMind), Leibny Paola Garcia Perera (Johns Hopkins University), Yuki Mitsufuji (Sony AI), Julia Hirschberg (Columbia University), Yoshiki Masuyama (MERL), and Robin Scheibler (Google DeepMind). It also featured a lively poster session with 50 posters.

MERL Speech and Audio Team's Yoshiki Masuyama presented a well-received overview of the team's recent work on "Neural Fields for Spatial Audio Modeling". His talk highlighted how neural fields are reshaping spatial audio research by enabling flexible, data-driven interpolation of head-related transfer functions and room impulse responses. He also discussed the integration of sound-propagation physics into neural field models through physics-informed neural networks, showcasing MERL’s advances at the intersection of acoustics and deep learning.

SANE 2025 was co-organized by Jonathan Le Roux (MERL), Quan Wang (Google DeepMind), and John R. Hershey (Google DeepMind). SANE remained a free event thanks to generous sponsorship by Google, MERL, Apple, Bose, and Carnegie Mellon University.

Slides and videos of the talks are available from the SANE workshop website and via a YouTube playlist.


EVENT: MERL Contributes to ICASSP 2025

Date: Sunday, April 6, 2025 - Friday, April 11, 2025

Location: Hyderabad, India

MERL Contacts: Wael H. Ali; Petros T. Boufounos; Radu Corcodel; Chiori Hori; Siddarth Jain; Toshiaki Koike-Akino; Jonathan Le Roux; Yanting Ma; Hassan Mansour; Yoshiki Masuyama; Joshua Rapp; Diego Romeres; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Artificial Intelligence, Communications, Computational Sensing, Electronic and Photonic Devices, Machine Learning, Robotics, Signal Processing, Speech & Audio

Brief: MERL has made numerous contributions to both the organization and technical program of ICASSP 2025, which is being held in Hyderabad, India from April 6-11, 2025.

Sponsorship

MERL is proud to be a Silver Patron of the conference and will participate in the student job fair on Thursday, April 10. Please join this session to learn more about employment opportunities at MERL, including openings for research scientists, post-docs, and interns.

MERL is pleased to be the sponsor of two IEEE Awards that will be presented at the conference. We congratulate Prof. Björn Erik Ottersten, the recipient of the 2025 IEEE Fourier Award for Signal Processing, and Prof. Shrikanth Narayanan, the recipient of the 2025 IEEE James L. Flanagan Speech and Audio Processing Award. Both awards will be presented in person at ICASSP by Anthony Vetro, MERL President & CEO.

Technical Program

MERL is presenting 15 papers in the main conference on a wide range of topics including source separation, sound event detection, sound anomaly detection, speaker diarization, music generation, robot action generation from video, indoor airflow imaging, WiFi sensing, Doppler single-photon Lidar, optical coherence tomography, and radar imaging. Another paper on spatial audio will be presented at the Generative Data Augmentation for Real-World Signal Processing Applications (GenDA) Satellite Workshop.

MERL researchers Petros Boufounos and Hassan Mansour will present a tutorial on “Computational Methods in Radar Imaging” on the afternoon of Monday, April 7.

Petros Boufounos will also give an industry talk on Thursday, April 10 at 12pm, on “A Physics-Informed Approach to Sensing”.

About ICASSP

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on research advances and the latest technological developments in signal and information processing. The event attracts more than 4000 participants each year.


Related Publications

@article{Masuyama2025dec,
  author = {Masuyama, Yoshiki and Wichern, Gordon and Germain, François G and Ick, Christopher and {Le Roux}, Jonathan},
  title = {{RANF: Neural Field-Based HRTF Spatial Upsampling with Retrieval Augmentation and Parameter Efficient Fine-Tuning}},
  journal = {IEEE Open Journal of Signal Processing},
  year = 2025,
  volume = 7,
  pages = {32--41},
  month = dec,
  doi = {10.1109/OJSP.2025.3640517},
  url = {https://www.merl.com/publications/TR2026-007}
}

@article{Masuyama2025jan,
  author = {Masuyama, Yoshiki and Wichern, Gordon and Germain, François G and Ick, Christopher and {Le Roux}, Jonathan},
  title = {{Retrieval-Augmented Neural Field for HRTF Upsampling and Personalization}},
  journal = {arXiv},
  year = 2025,
  month = jan,
  url = {https://arxiv.org/abs/2501.13017}
}