TR2022-011

MOST-GAN: 3D Morphable StyleGAN for Disentangled Face Image Manipulation

- Medin, S.C., Egger, B., Cherian, A., Wang, Y., Tenenbaum, J.B., Liu, X., Marks, T.K., "MOST-GAN: 3D Morphable StyleGAN for Disentangled Face Image Manipulation", AAAI Conference on Artificial Intelligence, DOI: 10.1609/aaai.v36i2.20091, February 2022, pp. 1962-1971.

@inproceedings{Medin2022feb,
  author = {Medin, Safa C. and Egger, Bernhard and Cherian, Anoop and Wang, Ye and Tenenbaum, Joshua B. and Liu, Xiaoming and Marks, Tim K.},
  title = {{MOST-GAN: 3D Morphable StyleGAN for Disentangled Face Image Manipulation}},
  booktitle = {AAAI Conference on Artificial Intelligence},
  year = 2022,
  pages = {1962--1971},
  month = feb,
  doi = {10.1609/aaai.v36i2.20091},
  url = {https://www.merl.com/publications/TR2022-011}
}



Abstract:

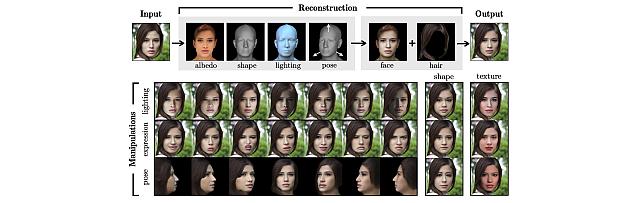

Recent advances in generative adversarial networks (GANs) have led to remarkable achievements in face image synthesis. While methods that use style-based GANs can generate strikingly photorealistic face images, it is often difficult to control the characteristics of the generated faces in a meaningful and disentangled way. Prior approaches aim to achieve such semantic control and disentanglement within the latent space of a previously trained GAN. In contrast, we propose a framework that explicitly models, a priori, physical attributes of the face such as 3D shape, albedo, pose, and lighting, thus providing disentanglement by design. Our method, MOST-GAN, integrates the expressive power and photorealism of style-based GANs with the physical disentanglement and flexibility of nonlinear 3D morphable models, which we couple with a state-of-the-art 2D hair manipulation network. MOST-GAN achieves photorealistic manipulation of portrait images with fully disentangled 3D control over their physical attributes, enabling extreme manipulation of lighting, facial expression, and pose, including pose variations up to full profile view.


Related Publication

@article{Medin2021oct,
  author = {Medin, Safa C. and Egger, Bernhard and Cherian, Anoop and Wang, Ye and Tenenbaum, Joshua B. and Liu, Xiaoming and Marks, Tim K.},
  title = {{MOST-GAN: 3D Morphable StyleGAN for Disentangled Face Image Manipulation}},
  journal = {arXiv},
  year = 2021,
  month = oct,
  url = {https://arxiv.org/abs/2111.01048}
}