TR2021-097

InSeGAN: A Generative Approach to Segmenting Identical Instances in Depth Images

Cherian, A., Pais, G., Jain, S., Marks, T.K., Sullivan, A., "InSeGAN: A Generative Approach to Segmenting Identical Instances in Depth Images", IEEE International Conference on Computer Vision (ICCV), October 2021, pp. 10023-10032.

    @inproceedings{Cherian2021oct,
      author = {Cherian, Anoop and Pais, Goncalo and Jain, Siddarth and Marks, Tim K. and Sullivan, Alan},
      title = {{InSeGAN: A Generative Approach to Segmenting Identical Instances in Depth Images}},
      booktitle = {IEEE International Conference on Computer Vision (ICCV)},
      year = 2021,
      pages = {10023--10032},
      month = oct,
      publisher = {CVF},
      url = {https://www.merl.com/publications/TR2021-097}
    }

Abstract:

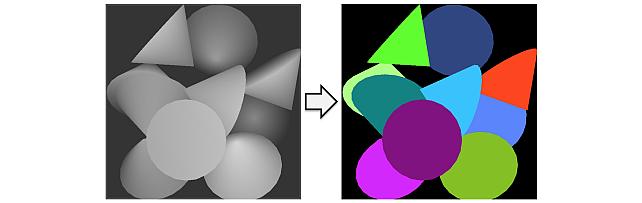

In this paper, we present InSeGAN, an unsupervised 3D generative adversarial network (GAN) for segmenting (nearly) identical instances of rigid objects in depth images. Using an analysis-by-synthesis approach, we design a novel GAN architecture to synthesize a multiple-instance depth image with independent control over each instance.

InSeGAN takes in a set of code vectors (e.g., random noise vectors), each encoding the 3D pose of an object that is represented by a learned implicit object template. The generator has two distinct modules. The first module, the instance feature generator, uses each encoded pose to transform the implicit template into a feature map representation of each object instance. The second module, the depth image renderer, aggregates all of the single-instance feature maps output by the first module and generates a multiple-instance depth image. A discriminator distinguishes the generated multiple-instance depth images from the distribution of true depth images. To use our model for instance segmentation, we propose an instance pose encoder that learns to take in a generated depth image and reproduce the pose code vectors for all of the object instances. To evaluate our approach, we introduce a new synthetic dataset, “Insta-10,” consisting of 100,000 depth images each with 5 instances of an object from one of 10 classes. Our experiments on Insta-10, as well as on real-world noisy depth images, show that InSeGAN achieves state-of-the-art performance, often outperforming prior methods by large margins.
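The two-module generator described above can be illustrated with a toy data-flow sketch. This is not the authors' implementation: the 1-D "feature maps", the shift-and-scale transform, the mean aggregation, and every name and constant below (`CODE_DIM`, `MAP_SIZE`, `instance_feature_generator`, `depth_image_renderer`) are assumptions chosen only to show how one code vector per instance gives independent control over that instance. The discriminator and the instance pose encoder are omitted.

```python
import random

NUM_INSTANCES = 5  # Insta-10 images contain 5 instances each (from the abstract)
CODE_DIM = 4       # hypothetical length of each pose code vector
MAP_SIZE = 8       # hypothetical length of a toy 1-D "feature map"


def sample_pose_codes(n, dim, rng):
    """Draw n random noise vectors; each stands in for one instance's pose code."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]


def instance_feature_generator(template, code):
    """Toy stand-in for module 1: transform the shared template by one pose code.

    The 'pose' here is just a circular shift plus a scale (an assumption);
    a real model would learn this transformation.
    """
    size = len(template)
    shift = int(abs(code[0]) * 10) % size
    scale = 1.0 + 0.1 * code[1]
    return [scale * template[(i - shift) % size] for i in range(size)]


def depth_image_renderer(feature_maps):
    """Toy stand-in for module 2: aggregate all single-instance maps into one output.

    Mean aggregation is an assumption made for this sketch.
    """
    n = len(feature_maps)
    return [sum(column) / n for column in zip(*feature_maps)]


def generate(template, codes):
    """Run both modules: one feature map per code, then a single aggregated output."""
    maps = [instance_feature_generator(template, code) for code in codes]
    return depth_image_renderer(maps), maps


rng = random.Random(0)
template = [float(i) for i in range(MAP_SIZE)]  # stand-in for the learned implicit template
codes = sample_pose_codes(NUM_INSTANCES, CODE_DIM, rng)
depth, maps = generate(template, codes)

# Perturb only the first instance's code: only that instance's feature map changes.
perturbed = [list(code) for code in codes]
perturbed[0][1] += 1.0
depth2, maps2 = generate(template, perturbed)
```

In this sketch, `maps2[1:]` is identical to `maps[1:]` while `maps2[0]` differs, which mirrors the per-instance control that the abstract attributes to the code vectors.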