TR2022-135

Cross-Modal Knowledge Transfer Without Task-Relevant Source Data


Ahmed, S.M., Lohit, S., Peng, K.-C., Jones, M.J., and Roy-Chowdhury, A.K., "Cross-Modal Knowledge Transfer Without Task-Relevant Source Data", European Conference on Computer Vision (ECCV), Avidan, S., Brostow, G., Cisse, M., Farinella, G.M., and Hassner, T., Eds., DOI: 10.1007/978-3-031-19830-4_7, October 2022, pp. 111-127.

    @inproceedings{Ahmed2022oct,
      author = {Ahmed, Sk Miraj and Lohit, Suhas and Peng, Kuan-Chuan and Jones, Michael J. and Roy Chowdhury, Amit K.},
      title = {{Cross-Modal Knowledge Transfer Without Task-Relevant Source Data}},
      booktitle = {European Conference on Computer Vision (ECCV)},
      year = 2022,
      editor = {Avidan, S and Brostow, G and Cisse M and Farinella, G.M. and Hassner T.},
      pages = {111--127},
      month = oct,
      publisher = {Springer},
      doi = {10.1007/978-3-031-19830-4_7},
      isbn = {978-3-031-19830-4},
      url = {https://www.merl.com/publications/TR2022-135}
    }



Abstract:

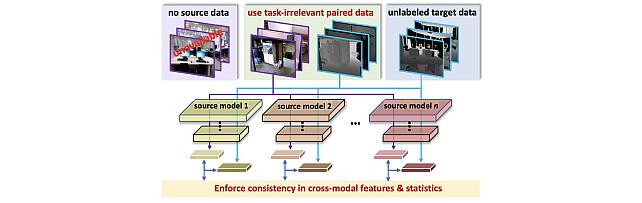

Cost-effective depth and infrared sensors are now a practical alternative to the usual RGB sensors, and they have some advantages over RGB in domains like autonomous navigation and remote sensing. As such, building computer vision and deep learning systems for depth and infrared data is crucial. However, large labeled datasets for these modalities are still lacking. In such cases, transferring knowledge from a neural network trained on a large, well-labeled dataset in the source modality (RGB) to a neural network that works on a target modality (depth, infrared, etc.) is of great value. For reasons like memory and privacy, it may not be possible to access the source data, so knowledge transfer needs to work with only the source models. We describe an effective solution, SOCKET: SOurce-free Cross-modal KnowledgE Transfer, for this challenging task of transferring knowledge from one source modality to a different target modality without access to task-relevant source data. The framework reduces the modality gap using paired task-irrelevant data, as well as by matching the mean and variance of the target features with the batch-norm statistics that are present in the source models. We show through extensive experiments that our method significantly outperforms existing source-free methods for classification tasks, which do not account for the modality gap.
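The two ideas named in the abstract for reducing the modality gap can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the squared-error form of both losses, and the toy data are assumptions made only for illustration, and the abstract does not specify how the terms are weighted or at which layers they are applied.

```python
import numpy as np


def bn_stat_matching_loss(target_feats, bn_mean, bn_var):
    """Gap between batch statistics of target features and stored batch-norm statistics.

    target_feats: array of shape (batch, channels), features of target-modality inputs.
    bn_mean, bn_var: arrays of shape (channels,), running statistics kept by the source model.
    The squared-error form is an assumption, not taken from the paper.
    """
    batch_mean = target_feats.mean(axis=0)
    batch_var = target_feats.var(axis=0)
    mean_gap = np.sum((batch_mean - bn_mean) ** 2)
    var_gap = np.sum((batch_var - bn_var) ** 2)
    return float(mean_gap + var_gap)


def paired_feature_loss(source_feats, target_feats):
    """Mean squared distance between features of paired source/target inputs.

    The pairs are task-irrelevant: for example, an RGB image and the depth map of
    the same scene, from classes unrelated to the task of interest.
    """
    return float(np.mean(np.sum((source_feats - target_feats) ** 2, axis=1)))


# Toy check: features that already follow the stored statistics give a small loss,
# while shifted and rescaled features give a large one.
rng = np.random.default_rng(0)
bn_mean = np.zeros(4)
bn_var = np.ones(4)
aligned = rng.normal(0.0, 1.0, size=(10000, 4))
shifted = rng.normal(3.0, 2.0, size=(10000, 4))

loss_aligned = bn_stat_matching_loss(aligned, bn_mean, bn_var)
loss_shifted = bn_stat_matching_loss(shifted, bn_mean, bn_var)
print(loss_aligned < loss_shifted)
```

In a training loop, terms like these would be minimized with respect to the target network's parameters, so the source data itself is never needed: only the source model's stored statistics and a set of paired task-irrelevant samples.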


Related Publication

    @article{Ahmed2022sep,
      author = {Ahmed, Sk Miraj and Lohit, Suhas and Peng, Kuan-Chuan and Jones, Michael J. and Roy-Chowdhury, Amit K},
      title = {{Cross-Modal Knowledge Transfer Without Task-Relevant Source Data}},
      journal = {arXiv},
      year = 2022,
      month = sep,
      url = {https://arxiv.org/abs/2209.04027}
    }