- Date & Time: Tuesday, September 28, 2021; 1:00 PM EST

Speaker: Dr. Ruohan Gao, Stanford University

MERL Host: Gordon Wichern

Research Areas: Computer Vision, Machine Learning, Speech & Audio

Abstract - While computer vision has made significant progress by "looking" (detecting objects, actions, or people based on their appearance), it often does not listen. Yet cognitive science tells us that perception develops by making use of all our senses without intensive supervision. Towards this goal, in this talk I will present my research on audio-visual learning: we disentangle object sounds from unlabeled video, use audio as an efficient preview for action recognition in untrimmed video, decode the monaural soundtrack into its binaural counterpart by injecting visual spatial information, and use echoes to interact with the environment for spatial image representation learning. Together, these are steps towards multimodal understanding of the visual world, where audio serves as both a semantic and a spatial signal. Finally, I will briefly talk about our latest work on multisensory learning for robotics.

-

- Date: July 26, 2021

MERL Contacts: Petros T. Boufounos; Jonathan Le Roux; Philip V. Orlik; Anthony Vetro

Research Areas: Signal Processing, Speech & Audio

Brief - IEEE has announced that the recipients of the 2022 IEEE James L. Flanagan Speech and Audio Processing Award will be Hervé Bourlard (EPFL/Idiap Research Institute) and Nelson Morgan (ICSI), "For contributions to neural networks for statistical speech recognition," and the recipient of the 2022 IEEE Fourier Award for Signal Processing will be Ali Sayed (EPFL), "For contributions to the theory and practice of adaptive signal processing." More details about the contributions of Prof. Bourlard and Prof. Morgan can be found in the announcements by ICSI and EPFL, and those of Prof. Sayed in EPFL's announcement. Mitsubishi Electric Research Laboratories (MERL) has recently become the new sponsor of these two prestigious awards, and extends our warmest congratulations to all of the 2022 award recipients.

The IEEE Board of Directors established the IEEE James L. Flanagan Speech and Audio Processing Award in 2002 for outstanding contributions to the advancement of speech and/or audio signal processing, while the IEEE Fourier Award for Signal Processing was established in 2012 for outstanding contribution to the advancement of signal processing, other than in the areas of speech and audio processing. Both awards have recognized the contributions of some of the most renowned pioneers and leaders in their respective fields. MERL is proud to support the recognition of outstanding contributions to the signal processing field through its sponsorship of these awards.

-

- Date: July 9, 2021

MERL Contacts: Petros T. Boufounos; Jonathan Le Roux; Philip V. Orlik; Anthony Vetro

Research Areas: Signal Processing, Speech & Audio

Brief - Mitsubishi Electric Research Laboratories (MERL) has become the new sponsor of two prestigious IEEE Technical Field Awards in Signal Processing, the IEEE James L. Flanagan Speech and Audio Processing Award and the IEEE Fourier Award for Signal Processing, for the years 2022-2031. "MERL is proud to support the recognition of outstanding contributions to signal processing by sponsoring both the IEEE James L. Flanagan Speech and Audio Processing Award and the IEEE Fourier Award for Signal Processing. These awards celebrate the creativity and innovation in the field that touch many aspects of our lives and drive our society forward," said Dr. Anthony Vetro, VP and Director at MERL.

The IEEE Board of Directors established the IEEE James L. Flanagan Speech and Audio Processing Award in 2002 for outstanding contributions to the advancement of speech and/or audio signal processing, while the IEEE Fourier Award for Signal Processing was established in 2012 for outstanding contribution to the advancement of signal processing, other than in the areas of speech and audio processing. Both awards have since recognized the contributions of some of the most renowned pioneers and leaders in their respective fields.

By underwriting these IEEE Technical Field Awards, MERL continues to make a mark by supporting the advancement of technology that makes lasting changes in the world.

-

- Date: February 15, 2021

Where: The 2nd International Symposium on AI Electronics

MERL Contact: Chiori Hori

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Brief - Chiori Hori, a Senior Principal Researcher in MERL's Speech and Audio Team, will be a keynote speaker at the 2nd International Symposium on AI Electronics, alongside Alex Acero, Senior Director of Apple Siri, Roberto Cipolla, Professor of Information Engineering at the University of Cambridge, and Hiroshi Amano, Professor at Nagoya University and winner of the Nobel prize in Physics for his work on blue light-emitting diodes. The symposium, organized by Tohoku University, will be held online on February 15, 2021, 10am-4pm (JST).

Chiori's talk, titled "Human Perspective Scene Understanding via Multimodal Sensing", will present MERL's work towards the development of scene-aware interaction. One important piece of technology that is still missing for human-machine interaction is natural and context-aware interaction, where machines understand their surrounding scene from the human perspective, and they can share their understanding with humans using natural language. To bridge this communications gap, MERL has been working at the intersection of research fields such as spoken dialog, audio-visual understanding, sensor signal understanding, and robotics technologies in order to build a new AI paradigm, called scene-aware interaction, that enables machines to translate their perception and understanding of a scene and respond to it using natural language to interact more effectively with humans. In this talk, the technologies will be surveyed, and an application for future car navigation will be introduced.

-

- Date & Time: Wednesday, December 9, 2020; 1:00-5:00PM EST

Location: Virtual

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

-

- Date: October 15, 2020

Awarded to: Ethan Manilow, Gordon Wichern, Jonathan Le Roux

MERL Contacts: Jonathan Le Roux; Gordon Wichern

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - Former MERL intern Ethan Manilow and MERL researchers Gordon Wichern and Jonathan Le Roux won Best Poster Award and Best Video Award at the 2020 International Society for Music Information Retrieval Conference (ISMIR 2020) for the paper "Hierarchical Musical Source Separation". The conference was held October 11-14 in a virtual format. The Best Poster Awards and Best Video Awards were awarded by popular vote among the conference attendees.

The paper proposes a new method for isolating individual sounds in an audio mixture that accounts for the hierarchical relationship between sound sources. Many sounds we are interested in analyzing are hierarchical in nature, e.g., during a music performance, a hi-hat note is one of many such hi-hat notes, which is one of several parts of a drumkit, itself one of many instruments in a band, which might be playing in a bar with other sounds occurring. Inspired by this, the paper re-frames the audio source separation problem as hierarchical, combining similar sounds together at certain levels while separating them at other levels, and shows on a musical instrument separation task that a hierarchical approach outperforms non-hierarchical models while also requiring less training data. The paper, poster, and video can be seen on the paper page on the ISMIR website.

-

- Date: August 23, 2020

Where: European Conference on Computer Vision (ECCV), online, 2020

MERL Contact: Anoop Cherian

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Brief - MERL Principal Research Scientist Anoop Cherian gave an invited talk titled "Sound2Sight: Audio-Conditioned Visual Imagination" at the Multi-modal Video Analysis workshop held in conjunction with the European Conference on Computer Vision (ECCV), 2020. The talk was based on a recent ECCV paper that describes a new multimodal reasoning task called Sound2Sight and a generative adversarial machine learning algorithm for producing plausible video sequences conditioned on sound and visual context.

-

- Date: July 22, 2020

Where: Tokyo, Japan

MERL Contacts: Anoop Cherian; Chiori Hori; Jonathan Le Roux; Tim K. Marks; Anthony Vetro

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Speech & Audio

Brief - Mitsubishi Electric Corporation announced that the company has developed what it believes to be the world’s first technology capable of highly natural and intuitive interaction with humans based on a scene-aware capability to translate multimodal sensing information into natural language.

The novel technology, Scene-Aware Interaction, incorporates Mitsubishi Electric’s proprietary Maisart® compact AI technology to analyze multimodal sensing information for highly natural and intuitive interaction with humans through context-dependent generation of natural language. The technology recognizes contextual objects and events based on multimodal sensing information, such as images and video captured with cameras, audio information recorded with microphones, and localization information measured with LiDAR.

Scene-Aware Interaction for car navigation, one target application, will provide drivers with intuitive route guidance. The technology is also expected to have applicability to human-machine interfaces for in-vehicle infotainment, interaction with service robots in building and factory automation systems, systems that monitor the health and well-being of people, surveillance systems that interpret complex scenes for humans and encourage social distancing, support for touchless operation of equipment in public areas, and much more. The technology is based on recent research by MERL's Speech & Audio and Computer Vision groups.

-

- Date: July 10, 2020

Where: Virtual Baltimore, MD

MERL Contact: Jonathan Le Roux

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - MERL Senior Principal Research Scientist and Speech and Audio Senior Team Leader Jonathan Le Roux was invited by the Center for Language and Speech Processing at Johns Hopkins University to give a plenary lecture at the 2020 Frederick Jelinek Memorial Summer Workshop on Speech and Language Technology (JSALT). The talk, entitled "Deep Learning for Multifarious Speech Processing: Tackling Multiple Speakers, Microphones, and Languages", presented an overview of deep learning techniques developed at MERL towards the goal of cracking the Tower of Babel version of the cocktail party problem, that is, separating and/or recognizing the speech of multiple unknown speakers speaking simultaneously in multiple languages, in both single-channel and multi-channel scenarios: from deep clustering to chimera networks, phasebook and friends, and from seamless ASR to MIMO-Speech and Transformer-based multi-speaker ASR.

JSALT 2020 is the seventh in a series of six-week-long research workshops on Machine Learning for Speech Language and Computer Vision Technology. A continuation of the well known Johns Hopkins University summer workshops, these workshops bring together diverse "dream teams" of leading professionals, graduate students, and undergraduates, in a truly cooperative, intensive, and substantive effort to advance the state of the science. MERL researchers led such teams in the JSALT 2015 workshop, on "Far-Field Speech Enhancement and Recognition in Mismatched Settings", and the JSALT 2018 workshop, on "Multi-lingual End-to-End Speech Recognition for Incomplete Data".

-

- Date: June 22, 2020

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - We are excited to announce that Dr. Zhong-Qiu Wang, who recently obtained his Ph.D. from The Ohio State University, has joined MERL's Speech and Audio Team as a Visiting Research Scientist. Zhong-Qiu brings strong expertise in microphone array processing, speech enhancement, blind source/speaker separation, and robust automatic speech recognition, for which he has developed some of the most advanced machine learning and deep learning methods.

Prior to joining MERL, Zhong-Qiu received the B.Eng. degree in 2013 from Harbin Institute of Technology, Harbin, China, and the M.Sc. and Ph.D. degrees in 2017 and 2020 from The Ohio State University, Columbus, USA, all in Computer Science. He was a summer research intern at Microsoft Research, Mitsubishi Electric Research Laboratories, and Google AI. He received a Best Student Paper Award at ICASSP 2018 for his work as an intern at MERL, and a Graduate Research Award from the OSU Department of Computer Science and Engineering in 2020.

-

- Date: May 4, 2020 - May 8, 2020

Where: Virtual Barcelona

MERL Contacts: Karl Berntorp; Petros T. Boufounos; Chiori Hori; Toshiaki Koike-Akino; Jonathan Le Roux; Dehong Liu; Yanting Ma; Hassan Mansour; Philip V. Orlik; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Computational Sensing, Computer Vision, Machine Learning, Signal Processing, Speech & Audio

Brief - MERL researchers are presenting 13 papers at the IEEE International Conference on Acoustics, Speech & Signal Processing (ICASSP), which is being held virtually from May 4-8, 2020. Petros Boufounos is also presenting a talk on the Computational Sensing Revolution in Array Processing (video) in ICASSP’s Industry Track, and Siheng Chen is co-organizing and chairing a special session on a Signal-Processing View of Graph Neural Networks.

Topics to be presented include recent advances in speech recognition, audio processing, scene understanding, computational sensing, array processing, and parameter estimation. Videos for all talks are available on MERL's YouTube channel, with corresponding links in the references below.

This year again, MERL is a sponsor of the conference and will be participating in the Student Job Fair; please join us to learn about our internship program and career opportunities.

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event attracts more than 2000 participants each year. Originally planned to be held in Barcelona, Spain, ICASSP has moved to a fully virtual setting due to the COVID-19 crisis, with free registration for participants not covering a paper.

-

- Date: December 18, 2019

Awarded to: Xuankai Chang, Wangyou Zhang, Yanmin Qian, Jonathan Le Roux, Shinji Watanabe

MERL Contact: Jonathan Le Roux

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - MERL researcher Jonathan Le Roux and co-authors Xuankai Chang, Shinji Watanabe (Johns Hopkins University), Wangyou Zhang, and Yanmin Qian (Shanghai Jiao Tong University) won the Best Paper Award at the 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU 2019), for the paper "MIMO-Speech: End-to-End Multi-Channel Multi-Speaker Speech Recognition". MIMO-Speech is a fully neural end-to-end framework that can transcribe the text of multiple speakers speaking simultaneously from multi-channel input. The system comprises a monaural masking network, a multi-source neural beamformer, and a multi-output speech recognition model, which are jointly optimized only via an automatic speech recognition (ASR) criterion. The award was received by lead author Xuankai Chang during the conference, which was held in Sentosa, Singapore from December 14-18, 2019.

-

- Date: November 9, 2019

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - Takaaki Hori has been elected to serve on the Speech and Language Processing Technical Committee (SLTC) of the IEEE Signal Processing Society for a 3-year term.

The SLTC promotes and influences all the technical areas of speech and language processing such as speech recognition, speech synthesis, spoken language understanding, speech to speech translation, spoken dialog management, speech indexing, information extraction from audio, and speaker and language recognition.

-

- Date: September 15, 2019 - September 19, 2019

Where: Graz, Austria

MERL Contacts: Chiori Hori; Jonathan Le Roux; Gordon Wichern

Research Areas: Artificial Intelligence, Machine Learning, Speech & Audio

Brief - MERL Speech & Audio Team researchers will be presenting 7 papers at the 20th Annual Conference of the International Speech Communication Association INTERSPEECH 2019, which is being held in Graz, Austria from September 15-19, 2019. Topics to be presented include recent advances in end-to-end speech recognition, speech separation, and audio-visual scene-aware dialog. Takaaki Hori is also co-presenting a tutorial on end-to-end speech processing.

Interspeech is the world's largest and most comprehensive conference on the science and technology of spoken language processing. It gathers around 2000 participants from all over the world.

-

- Date: May 12, 2019 - May 17, 2019

Where: Brighton, UK

MERL Contacts: Petros T. Boufounos; Anoop Cherian; Chiori Hori; Toshiaki Koike-Akino; Jonathan Le Roux; Dehong Liu; Hassan Mansour; Tim K. Marks; Philip V. Orlik; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Computational Sensing, Computer Vision, Machine Learning, Signal Processing, Speech & Audio

Brief - MERL researchers will be presenting 16 papers at the IEEE International Conference on Acoustics, Speech & Signal Processing (ICASSP), which is being held in Brighton, UK from May 12-17, 2019. Topics to be presented include recent advances in speech recognition, audio processing, scene understanding, computational sensing, and parameter estimation. MERL is also a sponsor of the conference and will be participating in the student career luncheon; please join us at the lunch to learn about our internship program and career opportunities.

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event attracts more than 2000 participants each year.

-

- Date: February 13, 2019

Where: Tokyo, Japan

MERL Contacts: Jonathan Le Roux; Gordon Wichern

Research Area: Speech & Audio

Brief - Mitsubishi Electric Corporation announced that it has developed the world's first technology capable of highly accurate multilingual speech recognition without being informed which language is being spoken. The novel technology, Seamless Speech Recognition, incorporates Mitsubishi Electric's proprietary Maisart compact AI technology and is built on a single system that can simultaneously identify and understand spoken languages. In tests involving 5 languages, the system achieved recognition with over 90 percent accuracy, without being informed which language was being spoken. When incorporating 5 more languages with lower resources, accuracy remained above 80 percent. The technology can also understand multiple people speaking either the same or different languages simultaneously. A live demonstration involving a multilingual airport guidance system took place on February 13 in Tokyo, Japan. It was widely covered by the Japanese media, with reports by all six main Japanese TV stations and multiple articles in print and online newspapers, including in Japan's top newspaper, Asahi Shimbun. The technology is based on recent research by MERL's Speech and Audio team.

Link:

Mitsubishi Electric Corporation Press Release

Media Coverage:

NHK, News (Japanese)

NHK World, News (English), video report (starting at 4'38")

TV Asahi, ANN news (Japanese)

Nippon TV, News24 (Japanese)

Fuji TV, Prime News Alpha (Japanese)

TV Tokyo, World Business Satellite (Japanese)

TV Tokyo, Morning Satellite (Japanese)

TBS, News, N Studio (Japanese)

The Asahi Shimbun (Japanese)

The Nikkei Shimbun (Japanese)

Nikkei xTech (Japanese)

Response (Japanese).

-

- Date & Time: Thursday, November 29, 2018; 4-6pm

Location: 201 Broadway, 8th floor, Cambridge, MA

MERL Contacts: Elizabeth Phillips; Anthony Vetro

Research Areas: Applied Physics, Artificial Intelligence, Communications, Computational Sensing, Computer Vision, Control, Data Analytics, Dynamical Systems, Electric Systems, Electronic and Photonic Devices, Machine Learning, Multi-Physical Modeling, Optimization, Robotics, Signal Processing, Speech & Audio

Brief - Snacks, demos, science: On Thursday 11/29, Mitsubishi Electric Research Labs (MERL) will host an open house for graduate+ students interested in internships, post-docs, and research scientist positions. The event will be held from 4-6pm and will feature demos & short presentations in our main areas of research including artificial intelligence, robotics, computer vision, speech processing, optimization, machine learning, data analytics, signal processing, communications, sensing, control and dynamical systems, as well as multi-physical modeling and electronic devices. MERL is a high-impact, publication-oriented research lab with very extensive internship and university collaboration programs. Most internships lead to publication; many of our interns and staff have gone on to notable careers at MERL and in academia. Come mix with our researchers, see our state-of-the-art technologies, and learn about our research opportunities. Dress code: casual, with resumes.

Pre-registration for the event is strongly encouraged:

merlopenhouse.eventbrite.com

Current internship and employment openings:

www.merl.com/internship/openings

www.merl.com/employment/employment

Information about working at MERL:

www.merl.com/employment.

-

- Date: Thursday, October 18, 2018

Location: Google, Cambridge, MA

MERL Contact: Jonathan Le Roux

Research Area: Speech & Audio

Brief - SANE 2018, a one-day event gathering researchers and students in speech and audio from the Northeast of the American continent, will be held on Thursday October 18, 2018 at Google, in Cambridge, MA. MERL is one of the organizers and sponsors of the workshop.

It is the 7th edition in the SANE series of workshops, which started at MERL in 2012. Since the first edition, the audience has steadily grown, with a record 180 participants in 2017.

SANE 2018 will feature invited talks by leading researchers from the Northeast, as well as from the international community. It will also feature a lively poster session, open to both students and researchers.

-

- Date: June 25, 2018 - August 3, 2018

Where: Johns Hopkins University, Baltimore, MD

MERL Contact: Jonathan Le Roux

Research Area: Speech & Audio

Brief - MERL Speech & Audio Team researcher Takaaki Hori led a team of 27 senior researchers and Ph.D. students from different organizations around the world, working on "Multi-lingual End-to-End Speech Recognition for Incomplete Data" as part of the Jelinek Memorial Summer Workshop on Speech and Language Technology (JSALT). The JSALT workshop is a renowned 6-week hands-on workshop held yearly since 1995. This year, the workshop was held at Johns Hopkins University in Baltimore from June 25 to August 3, 2018. Takaaki's team developed new methods for end-to-end Automatic Speech Recognition (ASR) with a focus on low-resource languages with limited labelled data.

End-to-end ASR can significantly reduce the burden of developing ASR systems for new languages, by eliminating the need for linguistic information such as pronunciation dictionaries. Some end-to-end systems have recently achieved performance comparable to or better than conventional systems in several tasks. However, the current model training algorithms basically require paired data, i.e., speech data and the corresponding transcription. A sufficient amount of such complete data is usually unavailable for minor languages, and creating such data sets is very expensive and time-consuming.

The goal of Takaaki's team project was to expand the applicability of end-to-end models to multilingual ASR, and to develop new technology that would make it possible to build highly accurate systems even for low-resource languages without a large amount of paired data. Some major accomplishments of the team include building multi-lingual end-to-end ASR systems for 17 languages, developing novel architectures and training methods for end-to-end ASR, building an end-to-end ASR-TTS (text-to-speech) chain for unpaired data training, and developing ESPnet, an open-source end-to-end speech processing toolkit. Three papers stemming from the team's work have already been accepted to the 2018 IEEE Spoken Language Technology Workshop (SLT), with several more to be submitted to upcoming conferences.

-

- Date: April 17, 2018

Awarded to: Zhong-Qiu Wang

MERL Contact: Jonathan Le Roux

Research Area: Speech & Audio

Brief - Former MERL intern Zhong-Qiu Wang (Ph.D. Candidate at Ohio State University) has received a Best Student Paper Award at the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP 2018) for the paper "Multi-Channel Deep Clustering: Discriminative Spectral and Spatial Embeddings for Speaker-Independent Speech Separation" by Zhong-Qiu Wang, Jonathan Le Roux, and John Hershey. The paper presents work performed during Zhong-Qiu's internship at MERL in the summer of 2017, extending MERL's pioneering Deep Clustering framework for speech separation to a multi-channel setup. The award was received on behalf of Zhong-Qiu by MERL researcher and co-author Jonathan Le Roux during the conference, held in Calgary April 15-20.

-

- Date: April 15, 2018 - April 20, 2018

Where: Calgary, AB

MERL Contacts: Petros T. Boufounos; Toshiaki Koike-Akino; Jonathan Le Roux; Dehong Liu; Hassan Mansour; Philip V. Orlik; Pu (Perry) Wang

Research Areas: Computational Sensing, Digital Video, Speech & Audio

Brief - MERL researchers are presenting 9 papers at the IEEE International Conference on Acoustics, Speech & Signal Processing (ICASSP), which is being held in Calgary from April 15-20, 2018. Topics to be presented include recent advances in speech recognition, audio processing, and computational sensing. MERL is also a sponsor of the conference.

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event attracts more than 2000 participants each year.

-

- Date & Time: Tuesday, March 6, 2018; 12:00 PM

Speaker: Scott Wisdom, Affectiva

MERL Host: Jonathan Le Roux

Research Area: Speech & Audio

Abstract  Recurrent neural networks (RNNs) are effective, data-driven models for sequential data, such as audio and speech signals. However, like many deep networks, RNNs are essentially black boxes; though they are effective, their weights and architecture are not directly interpretable by practitioners. A major component of my dissertation research is explaining the success of RNNs and constructing new RNN architectures through the process of "deep unfolding," which can construct and explain deep network architectures using an equivalence to inference in statistical models. Deep unfolding yields principled initializations for training deep networks, provides insight into their effectiveness, and assists with interpretation of what these networks learn.

In particular, I will show how RNNs with rectified linear units and residual connections are a particular deep unfolding of a sequential version of the iterative shrinkage-thresholding algorithm (ISTA), a simple and classic algorithm for solving L1-regularized least-squares. This equivalence allows interpretation of state-of-the-art unitary RNNs (uRNNs) as an unfolded sparse coding algorithm. I will also describe a new type of RNN architecture called deep recurrent nonnegative matrix factorization (DR-NMF). DR-NMF is an unfolding of a sparse NMF model of nonnegative spectrograms for audio source separation. Both of these networks outperform conventional LSTM networks while also providing interpretability for practitioners.
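As a rough illustration of the algorithm the abstract names, the following is a minimal sketch of plain (non-sequential) ISTA for L1-regularized least squares, written in pure Python. It is not the speaker's implementation: the function names, the dense list-of-lists matrix format, and the fixed step size are assumptions made for this example. The soft-thresholding step is expressed through two rectified linear units, which hints at why an unfolded ISTA iteration resembles a network layer with ReLU activations and a residual (identity) path.

```python
def relu(v):
    """Elementwise rectified linear unit."""
    return [max(0.0, t) for t in v]


def soft_threshold(z, b):
    """Soft-thresholding via two ReLUs: relu(z - b) - relu(-z - b)."""
    pos = relu([t - b for t in z])
    neg = relu([-t - b for t in z])
    return [p - n for p, n in zip(pos, neg)]


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * t for m, t in zip(row, v)) for row in M]


def transpose(M):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def ista(A, y, lam, step, n_iter):
    """Approximately minimize 0.5*||y - A x||^2 + lam*||x||_1.

    Assumes `step` is at most 1 / (largest eigenvalue of A^T A),
    which the caller must ensure (hypothetical interface).
    """
    At = transpose(A)
    x = [0.0] * len(A[0])
    for _ in range(n_iter):
        # Gradient of the least-squares term: A^T (A x - y)
        residual = [p - q for p, q in zip(matvec(A, x), y)]
        grad = matvec(At, residual)
        # Gradient step keeps x as an identity (residual-like) term
        z = [xi - step * g for xi, g in zip(x, grad)]
        # Proximal step for the L1 penalty
        x = soft_threshold(z, step * lam)
    return x


# Toy usage with an identity dictionary: small entries of y are zeroed out.
x_hat = ista([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.5], lam=1.0, step=1.0, n_iter=5)
```

Each iteration applies a fixed linear map to the previous estimate and the input, followed by a thresholding nonlinearity; unrolling a fixed number of iterations and making the maps trainable is the general idea behind deep unfolding, under the simplifications stated above.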

-

- Date: February 5, 2018

Where: National Public Radio (NPR)

MERL Contact: Jonathan Le Roux

Research Area: Speech & Audio

Brief - MERL's speech separation technology was featured in NPR's All Things Considered, as part of an episode of All Tech Considered on artificial intelligence, "Can Computers Learn Like Humans?". An example separating the overlapped speech of two of the show's hosts was played on the air.

The technology is based on a proprietary deep learning method called Deep Clustering. It is the world's first technology that separates in real time the simultaneous speech of multiple unknown speakers recorded with a single microphone. It is a key step towards building machines that can interact in noisy environments, in the same way that humans can have meaningful conversations in the presence of many other conversations.

A live demonstration was featured in Mitsubishi Electric Corporation's Annual R&D Open House last year, and was also covered in international media at the time.

(Photo credit: Sam Rowe for NPR)

Link:

"Can Computers Learn Like Humans?" (NPR, All Things Considered)

MERL Deep Clustering Demo.

-

- Date & Time: Friday, February 2, 2018; 12:00

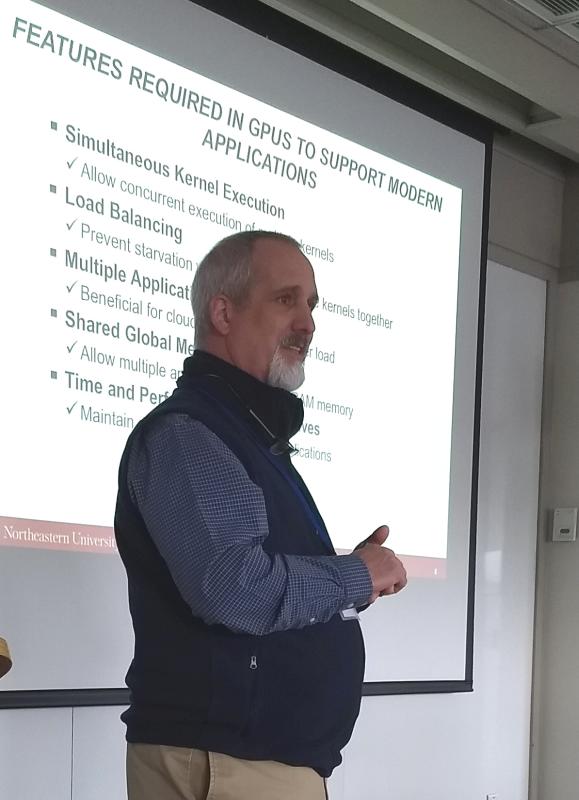

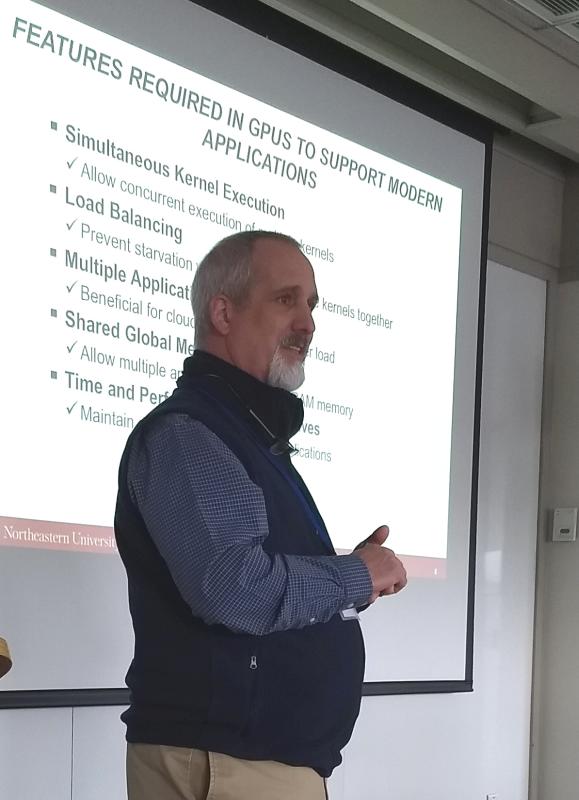

Speaker: Dr. David Kaeli, Northeastern University

MERL Host: Abraham Goldsmith

Research Areas: Control, Optimization, Machine Learning, Speech & Audio

Abstract - GPU computing is alive and well! The GPU has allowed researchers to overcome a number of computational barriers in important problem domains. Still, challenges remain in using a GPU to target more general-purpose applications. GPUs achieve impressive speedups when compared to CPUs, since GPUs have a large number of compute cores and high memory bandwidth. Recent GPU performance is approaching 10 teraflops of single-precision performance on a single device. In this talk we will discuss current trends with GPUs, including some advanced features that allow them to exploit multi-context grains of parallelism. Further, we consider how GPUs can be treated as cloud-based resources, enabling a GPU-enabled server to deliver HPC cloud services by leveraging virtualization and collaborative filtering. Finally, we argue for new heterogeneous workloads and discuss the role of the Heterogeneous Systems Architecture (HSA), a standard that further supports integration of the CPU and GPU into a common framework. We present a new class of benchmarks specifically tailored to evaluate the benefits of features supported in the new HSA programming model.

-

- Date: January 31, 2018

MERL Contact: Chiori Hori

Research Area: Speech & Audio

Brief - Chiori Hori has been elected to serve on the Speech and Language Processing Technical Committee (SLTC) of the IEEE Signal Processing Society for a 3-year term.

The SLTC promotes and influences all the technical areas of speech and language processing such as speech recognition, speech synthesis, spoken language understanding, speech to speech translation, spoken dialog management, speech indexing, information extraction from audio, and speaker and language recognition.

-
