TR2012-044

Changedetection.net: A New Change Detection Benchmark Dataset


- Goyette, N., Jodoin, P.-M., Porikli, F., Konrad, J., Ishwar, P., "Changedetection.net: A New Change Detection Benchmark Dataset", IEEE Conference on Computer Vision and Pattern Recognition (CVPR), DOI: 10.1109/CVPRW.2012.6238919, June 2012, pp. 1-8.

@inproceedings{Goyette2012jun,
  author = {Goyette, N. and Jodoin, P.-M. and Porikli, F. and Konrad, J. and Ishwar, P.},
  title = {Changedetection.net: A New Change Detection Benchmark Dataset},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = 2012,
  pages = {1--8},
  month = jun,
  doi = {10.1109/CVPRW.2012.6238919},
  url = {https://www.merl.com/publications/TR2012-044}
}


Research Area: Computer Vision

Abstract:

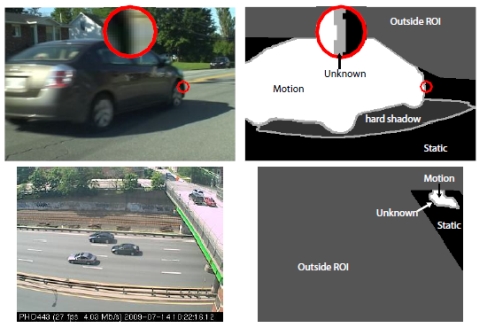

Change detection is one of the most commonly encountered low-level tasks in computer vision and video processing. A plethora of algorithms have been developed to date, yet no widely accepted, realistic, large-scale video dataset exists for benchmarking different methods. Presented here is a unique change detection benchmark dataset consisting of nearly 90,000 frames in 31 video sequences representing 6 categories selected to cover a wide range of challenges in 2 modalities (color and thermal IR). A distinguishing characteristic of this dataset is that each frame is meticulously annotated with ground-truth foreground, background, and shadow area boundaries, an effort that goes well beyond a simple binary label denoting the presence of change. This enables objective and precise quantitative comparison and ranking of change detection algorithms. This paper presents and discusses various aspects of the new dataset, the quantitative performance metrics used, and comparative results for over a dozen previous and new change detection algorithms. The dataset, evaluation tools, and algorithm rankings are publicly available on the changedetection.net website and will be updated in the future based on feedback from academia and industry.
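The abstract mentions quantitative performance metrics without listing them. As a hedged illustration only, the sketch below scores one predicted binary mask against a ground-truth mask. It assumes a CDnet-style grayscale label convention (0 static background, 50 hard shadow, 85 outside the region of interest, 170 unknown motion, 255 moving foreground), treats shadow pixels as background, ignores the last two non-motion labels, and computes common pixel-level metrics (recall, specificity, FPR, FNR, percentage of wrong classifications PWC, precision, F-measure). These label values, the shadow handling, and the names `Counts`, `update`, and `metrics` are assumptions for illustration, not the official evaluation tools.

```python
from dataclasses import dataclass
from typing import Dict, Sequence

# Assumed ground-truth label values (CDnet-style grayscale masks).
STATIC, SHADOW, OUTSIDE_ROI, UNKNOWN, MOTION = 0, 50, 85, 170, 255
IGNORED = {OUTSIDE_ROI, UNKNOWN}  # pixels excluded from scoring (assumption)


def _ratio(num: float, den: float) -> float:
    """Safe division: returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


@dataclass
class Counts:
    """Hypothetical accumulator of pixel-level confusion counts."""
    tp: int = 0
    fp: int = 0
    fn: int = 0
    tn: int = 0

    def update(self, gt: Sequence[Sequence[int]], pred: Sequence[Sequence[int]]) -> None:
        """Accumulate counts for one frame.

        gt uses the assumed label values above; pred is binary (0 or 255).
        STATIC and SHADOW pixels are both scored as background, so marking a
        shadow as foreground counts as a false positive.
        """
        for gt_row, pred_row in zip(gt, pred):
            for g, p in zip(gt_row, pred_row):
                if g in IGNORED:
                    continue
                truth_fg = g == MOTION
                pred_fg = p == 255
                if truth_fg and pred_fg:
                    self.tp += 1
                elif truth_fg:
                    self.fn += 1
                elif pred_fg:
                    self.fp += 1
                else:
                    self.tn += 1

    def metrics(self) -> Dict[str, float]:
        """Compute pixel-level metrics from the accumulated counts."""
        recall = _ratio(self.tp, self.tp + self.fn)
        precision = _ratio(self.tp, self.tp + self.fp)
        total = self.tp + self.fp + self.fn + self.tn
        return {
            "recall": recall,
            "specificity": _ratio(self.tn, self.tn + self.fp),
            "fpr": _ratio(self.fp, self.fp + self.tn),
            "fnr": _ratio(self.fn, self.tp + self.fn),
            "pwc": 100.0 * _ratio(self.fn + self.fp, total),
            "precision": precision,
            "f_measure": _ratio(2 * precision * recall, precision + recall),
        }


# Usage: one tiny 2x3 frame (one TP, one FP on a shadow, one FN, one TN).
counts = Counts()
counts.update([[0, 50, 255], [255, 85, 170]], [[0, 255, 255], [0, 255, 0]])
print(counts.metrics()["f_measure"])  # 0.5
```

Counts are accumulated across frames before metrics are computed, which is one common way to aggregate per-sequence scores; averaging per-frame metrics instead would generally give different rankings.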

Related News & Events


NEWS CVPR 2012: 4 publications by Yuichi Taguchi, Srikumar Ramalingam and Amit K. Agrawal

Date: June 16, 2012

Where: IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

Research Area: Computer Vision

Brief: The following papers were presented at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR):

- "Connecting the Dots in Multi-Class Classification: From Nearest Subspace to Collaborative Representation" by Chi, Y. and Porikli, F.
- "Decomposing Global Light Transport using Time of Flight Imaging" by Wu, D., O'Toole, M., Velten, A., Agrawal, A. and Raskar, R.
- "Changedetection.net: A New Change Detection Benchmark Dataset" by Goyette, N., Jodoin, P.-M., Porikli, F., Konrad, J. and Ishwar, P.
- "A Theory of Multi-Layer Flat Refractive Geometry" by Agrawal, A., Ramalingam, S., Taguchi, Y. and Chari, V.