TR2012-032

Voting-based Pose Estimation for Robotic Assembly Using a 3D Sensor


- Choi, C., Taguchi, Y., Tuzel, O., Liu, M.-Y., Ramalingam, S., "Voting-based Pose Estimation for Robotic Assembly Using a 3D Sensor", IEEE International Conference on Robotics and Automation (ICRA), DOI: 10.1109/ICRA.2012.6225371, May 2012, pp. 1724-1731.

- @inproceedings{Choi2012may,

- author = {Choi, C. and Taguchi, Y. and Tuzel, O. and Liu, M.-Y. and Ramalingam, S.},

- title = {Voting-based Pose Estimation for Robotic Assembly Using a 3D Sensor},

- booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

- year = 2012,

- pages = {1724--1731},

- month = may,

- doi = {10.1109/ICRA.2012.6225371},

- url = {https://www.merl.com/publications/TR2012-032}

- }



Abstract:

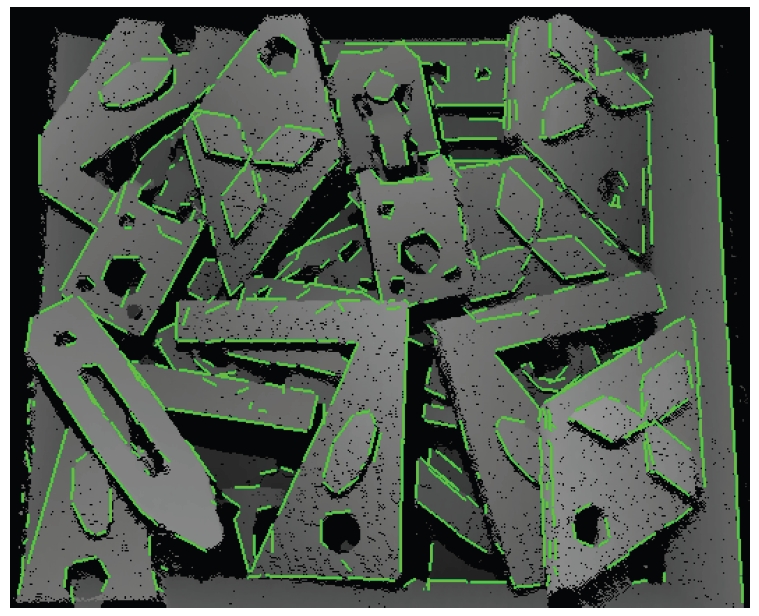

We propose a voting-based pose estimation algorithm applicable to 3D sensors, which are rapidly replacing their 2D counterparts in many robotics, computer vision, and gaming applications. It was recently shown that, in a voting framework, pairs of oriented 3D points (points on the object surface with normals) enable fast and robust pose estimation. Although oriented surface points are discriminative for objects with sufficient curvature changes, they are not compact and discriminative enough for many industrial and real-world objects that are mostly planar. Just as edges play a key role in 2D registration, depth discontinuities are crucial in 3D. In this paper, we investigate and develop a family of pose estimation algorithms that better exploit this boundary information. In addition to oriented surface points, we use two other primitives: boundary points with directions and boundary line segments. Our experiments show that these carefully chosen primitives encode more information compactly, thereby providing higher accuracy for a wide class of industrial parts and enabling faster computation. We demonstrate a practical robotic bin-picking system using the proposed algorithm and a 3D sensor.
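The voting idea the abstract builds on can be illustrated for oriented surface points. The sketch below is a simplified illustration under stated assumptions, not the paper's implementation. It describes each ordered pair of points by the feature F = (|d|, angle(n1, d), angle(n2, d), angle(n1, n2)), where d = p2 - p1. It then hashes the quantized model features and lets each scene pair vote for a model reference point together with a rotation angle alpha about that point's normal. The quantization steps, the 30 angle bins, all function names, and the noise-free synthetic data are hypothetical choices. The paper's boundary-point and line-segment primitives are not implemented here.

```python
import math
import random
from collections import defaultdict

# Small vector helpers on 3-tuples (pure Python, no NumPy).
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def norm(a): return math.sqrt(dot(a, a))
def matvec(m, v): return tuple(dot(row, v) for row in m)
def unit(v): return tuple(x / norm(v) for x in v)

def angle(a, b):
    """Unsigned angle between two vectors, in [0, pi]."""
    c = dot(a, b) / (norm(a) * norm(b))
    return math.acos(max(-1.0, min(1.0, c)))

def pair_feature(p1, n1, p2, n2):
    """Point pair feature (|d|, angle(n1, d), angle(n2, d), angle(n1, n2)), d = p2 - p1."""
    d = sub(p2, p1)
    return (norm(d), angle(n1, d), angle(n2, d), angle(n1, n2))

def quantize(f, d_step=0.05, a_step=math.pi / 15):
    """Map a feature to a discrete hash key (step sizes are illustrative assumptions)."""
    return (int(f[0] / d_step),) + tuple(int(x / a_step) for x in f[1:])

def rotation_to_x(n):
    """Rotation matrix (Rodrigues' formula) taking the unit vector n onto +x."""
    axis = cross(n, (1.0, 0.0, 0.0))
    s, c = norm(axis), n[0]  # sin and cos of the angle between n and +x
    if s < 1e-12:  # n is (anti)parallel to the x-axis
        return ((1, 0, 0), (0, 1, 0), (0, 0, 1)) if c > 0 else ((-1, 0, 0), (0, -1, 0), (0, 0, 1))
    k = tuple(x / s for x in axis)
    K = ((0, -k[2], k[1]), (k[2], 0, -k[0]), (-k[1], k[0], 0))
    return tuple(tuple(c * (i == j) + s * K[i][j] + (1 - c) * k[i] * k[j]
                       for j in range(3)) for i in range(3))

def planar_angle(pr, nr, pi):
    """Angle of pi around the x-axis after moving pr to the origin and nr onto +x."""
    v = matvec(rotation_to_x(nr), sub(pi, pr))
    return math.atan2(v[2], v[1])

def build_model_table(pts, nrm):
    """Hash every ordered model pair by its quantized feature."""
    table = defaultdict(list)
    for r in range(len(pts)):
        for i in range(len(pts)):
            if i != r:
                key = quantize(pair_feature(pts[r], nrm[r], pts[i], nrm[i]))
                table[key].append((r, planar_angle(pts[r], nrm[r], pts[i])))
    return table

def vote(table, pts, nrm, ref, n_bins=30):
    """Vote over (model reference point, alpha bin) for one scene reference point."""
    acc = defaultdict(int)
    for i in range(len(pts)):
        if i == ref:
            continue
        key = quantize(pair_feature(pts[ref], nrm[ref], pts[i], nrm[i]))
        theta_s = planar_angle(pts[ref], nrm[ref], pts[i])
        for model_ref, theta_m in table.get(key, []):
            alpha = (theta_s - theta_m) % (2 * math.pi)  # rotation about the aligned normal
            acc[(model_ref, int(alpha / (2 * math.pi) * n_bins) % n_bins)] += 1
    return max(acc.items(), key=lambda kv: kv[1])

# Synthetic model: random points with random unit normals.
rng = random.Random(0)
model_pts = [tuple(rng.random() for _ in range(3)) for _ in range(40)]
model_nrm = [unit(tuple(rng.gauss(0, 1) for _ in range(3))) for _ in range(40)]
# Scene = model rotated 0.5 rad about z and translated (noise-free).
cz, sz = math.cos(0.5), math.sin(0.5)
Q = ((cz, -sz, 0.0), (sz, cz, 0.0), (0.0, 0.0, 1.0))
t = (0.3, -0.2, 1.0)
scene_pts = [tuple(a + b for a, b in zip(matvec(Q, p), t)) for p in model_pts]
scene_nrm = [matvec(Q, n) for n in model_nrm]

table = build_model_table(model_pts, model_nrm)
(best_ref, alpha_bin), count = vote(table, scene_pts, scene_nrm, ref=5)
print(best_ref, alpha_bin, count)
```

Because the synthetic scene is an exact rigid copy of the model, the pairs anchored at scene point 5 reproduce the features of the pairs anchored at model point 5, so the accumulator should peak at that model reference point. Real depth data adds noise, missing points, and clutter, which spread the votes.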

Related News & Events


NEWS MERL researcher Oncel Tuzel gives keynote talk at 2015 International Symposium on Visual Computing

Date: December 14, 2015 - December 16, 2015

Where: Las Vegas, NV, USA

Research Area: Machine Learning

Brief: MERL researcher Oncel Tuzel gave a keynote talk at the 2015 International Symposium on Visual Computing in Las Vegas on Dec. 16, 2015. The talk, titled "Machine vision for robotic bin-picking: Sensors and algorithms", reviewed MERL's research on applying 2D and 3D sensing and machine learning to the problem of general pose estimation.

The talk abstract was: For over four years at MERL, we have worked on the robot "bin-picking" problem: using a 2D or 3D camera to look into a bin of parts and determine the pose (3D rotation and translation) of a good candidate to pick up. We have solved the problem in several different ways with several different sensors. I will briefly describe the sensors and the algorithms. In the first half of the talk, I will describe the Multi-Flash camera, a 2D camera with 8 flashes, and explain how this inexpensive camera design is used to extract robust geometric features (depth edges and specular edges) from the parts in a cluttered bin. I will present two algorithms for fast and robust pose estimation of parts with different surface characteristics: (1) fast directional chamfer matching, a sub-linear time line matching algorithm, and (2) specular line reconstruction. In the second half of the talk, I will present a voting-based pose estimation algorithm applicable to 3D sensors. We represent three-dimensional objects using a set of oriented point pair features built from surface points with normals and boundary points with directions. I will describe a max-margin learning framework to identify discriminative features on the surface of the objects. The algorithm selects and ranks features according to their importance for the specified task, which leads to improved accuracy and reduced computational cost.
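Boundary points are taken at depth discontinuities in the range image. The sketch below shows one simple way to find them and attach an image-plane direction. It is hypothetical and not the detector used in MERL's system. A pixel is marked when a 4-neighbour is farther from the sensor by more than a threshold, and a direction is then estimated from nearby boundary pixels by a 2D principal-axis fit. The threshold `jump`, the window `radius`, and the function names are illustrative assumptions. Obtaining 3D directions would additionally require back-projecting pixels with the sensor's intrinsics, which is not shown.

```python
import math

def boundary_pixels(depth, jump=0.05):
    """Return (x, y) pixels on the near side of a depth discontinuity.

    A pixel is kept when some 4-neighbour is farther from the sensor by more
    than `jump` (same units as the depth map; the value is illustrative).
    """
    h, w = len(depth), len(depth[0])
    found = []
    for y in range(h):
        for x in range(w):
            z = depth[y][x]
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and depth[ny][nx] - z > jump:
                    found.append((x, y))
                    break
    return found

def boundary_direction(points, center, radius=2):
    """Unit 2D principal axis of the boundary pixels inside a square window."""
    near = [(x, y) for x, y in points
            if abs(x - center[0]) <= radius and abs(y - center[1]) <= radius]
    mx = sum(x for x, _ in near) / len(near)
    my = sum(y for _, y in near) / len(near)
    sxx = sum((x - mx) ** 2 for x, _ in near)
    syy = sum((y - my) ** 2 for _, y in near)
    sxy = sum((x - mx) * (y - my) for x, y in near)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # major-axis angle of the scatter
    return (math.cos(theta), math.sin(theta))

# Synthetic 8x8 depth map: background at 2.0, a nearer vertical strip (x = 2..5) at 1.0.
depth = [[1.0 if 2 <= x <= 5 else 2.0 for x in range(8)] for y in range(8)]
edges = boundary_pixels(depth)
direction = boundary_direction(edges, center=(2, 4))
print(sorted(edges)[:3], direction)
```

Only the strip's outer columns are marked, because background pixels next to the strip see a nearer neighbour rather than a farther one. The direction at (2, 4) comes out vertical (up to sign), matching the strip's edge.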


NEWS ICRA 2012: 3 publications by Yuichi Taguchi, Srikumar Ramalingam, Amit K. Agrawal, C. Oncel Tuzel and Ming-Yu Liu

Date: May 14, 2012

Where: IEEE International Conference on Robotics and Automation (ICRA)

Research Area: Computer Vision

Brief: The papers "Voting-based Pose Estimation for Robotic Assembly Using a 3D Sensor" by Choi, C., Taguchi, Y., Tuzel, O., Liu, M.-Y. and Ramalingam, S., "Convex Bricks: A New Primitive for Visual Hull Modeling and Reconstruction" by Chari, V., Agrawal, A., Taguchi, Y. and Ramalingam, S., and "Coverage Optimized Active Learning for k-NN Classifiers" by Joshi, A.J., Porikli, F. and Papanikolopoulos, N. were presented at the IEEE International Conference on Robotics and Automation (ICRA).