TR2011-081

Construction of Embedded Markov Decision Processes for Optimal Control of Non-Linear Systems with Continuous State Spaces


- Nikovski, D., Esenther, A., "Construction of Embedded Markov Decision Processes for Optimal Control of Non-Linear Systems with Continuous State Spaces", IEEE Conference on Decision and Control and European Control Conference (CDC-ECC), DOI: 10.1109/CDC.2011.6161310, December 2011, pp. 7944-7949.

    @inproceedings{Nikovski2011dec,
        author = {Nikovski, D. and Esenther, A.},
        title = {Construction of Embedded Markov Decision Processes for Optimal Control of Non-Linear Systems with Continuous State Spaces},
        booktitle = {IEEE Conference on Decision and Control and European Control Conference (CDC-ECC)},
        year = 2011,
        pages = {7944--7949},
        month = dec,
        doi = {10.1109/CDC.2011.6161310},
        url = {https://www.merl.com/publications/TR2011-081}
    }



MERL Contact: Daniel N. Nikovski


Abstract:

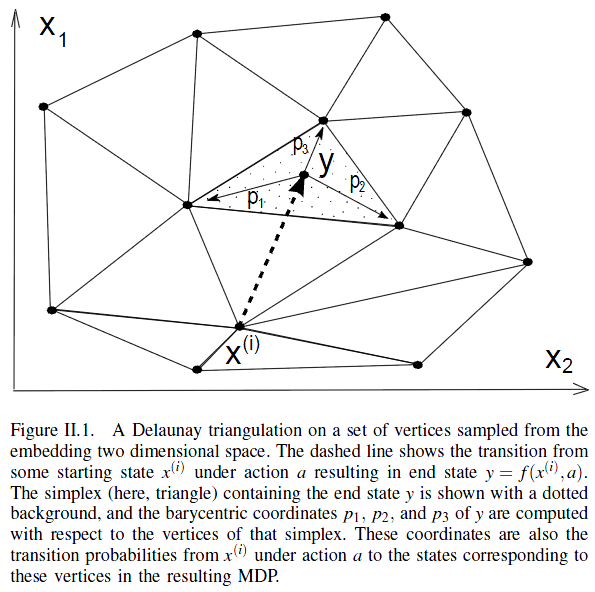

We consider the problem of constructing a suitable discrete-state approximation of an arbitrary non-linear dynamical system with a continuous state space and discrete control actions, such that close-to-optimal sequential control of the system can be obtained by value or policy iteration on the approximate model. We propose a method for approximating the continuous dynamics by an embedded Markov decision process (MDP) defined over an arbitrary set of discrete states sampled from the original continuous state space. The mathematical similarity between barycentric coordinates (the weights of a convex combination, which are non-negative and sum to one) and probability mass functions is exploited to compute the transition matrices and the initial state distribution of the MDP. Barycentric coordinates are computed efficiently on a Delaunay triangulation of the set of discrete states, ensuring maximal accuracy of the approximation and of the resulting control policy.
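The construction described in the abstract can be illustrated with a small sketch. This is a minimal sketch under stated assumptions, not the authors' implementation: it restricts the state space to one dimension, where the Delaunay triangulation of sorted samples reduces to the intervals between neighbouring states; it clamps successor states that fall outside the sampled range to the nearest end state; and the dynamics `step`, the `reward`, and the helpers `barycentric_1d`, `build_embedded_mdp` and `value_iteration` are all hypothetical names introduced for illustration. For each sampled state and action, one step of the continuous dynamics is simulated, and the barycentric coordinates of the successor over the enclosing simplex are used directly as that row of the transition matrix.

```python
import bisect
import math


def barycentric_1d(states, x):
    """Barycentric (convex-combination) weights of x over sorted `states`.

    In 1-D the Delaunay triangulation of the samples is the set of intervals
    between neighbouring states, so x gets at most two weights that are
    non-negative and sum to 1, i.e. a probability mass function.
    Assumption: points outside the sampled range are clamped to the end state.
    """
    if x <= states[0]:
        return {0: 1.0}
    if x >= states[-1]:
        return {len(states) - 1: 1.0}
    j = bisect.bisect_right(states, x)  # states[j-1] <= x < states[j]
    lo, hi = states[j - 1], states[j]
    w = (x - lo) / (hi - lo)
    return {j - 1: 1.0 - w, j: w}


def build_embedded_mdp(states, actions, step, reward):
    """Simulate one step of the continuous dynamics from every sampled state
    and spread the successor over neighbouring states by its barycentric
    weights. P[a][i] is a sparse row {k: probability}; R[a][i] is a reward."""
    P = [[barycentric_1d(states, step(s, a)) for s in states] for a in actions]
    R = [[reward(s, a) for s in states] for a in actions]
    return P, R


def value_iteration(P, R, gamma=0.95, tol=1e-8, max_iter=10_000):
    """Standard value iteration on the embedded MDP; returns the value
    function and a greedy policy given as action indices."""
    n_actions, n_states = len(P), len(P[0])
    V = [0.0] * n_states
    for _ in range(max_iter):
        Q = [[R[a][i] + gamma * sum(p * V[k] for k, p in P[a][i].items())
              for a in range(n_actions)]
             for i in range(n_states)]
        V_new = [max(q) for q in Q]
        converged = max(abs(u - v) for u, v in zip(V_new, V)) < tol
        V = V_new
        if converged:
            break
    policy = [max(range(n_actions), key=q.__getitem__) for q in Q]
    return V, policy


# Hypothetical example system (not from the paper): non-linear drift
# dx/dt = a - 0.5*sin(x), Euler step dt = 0.1, three discrete actions.
def step(x, a):
    return x + 0.1 * (a - 0.5 * math.sin(x))


def reward(x, a):
    return -x * x - 0.01 * a * a  # quadratic cost of being away from 0


states = [-2.0 + 0.1 * i for i in range(41)]  # sampled discrete states
actions = (-1.0, 0.0, 1.0)
P, R = build_embedded_mdp(states, actions, step, reward)
V, policy = value_iteration(P, R)
init = barycentric_1d(states, 0.537)  # initial-state distribution
```

The same `barycentric_1d` call that fills the transition rows also maps a continuous initial state to the MDP's initial state distribution, as `init` shows: the probability mass is split between the two neighbouring sampled states in proportion to their proximity. In more than one dimension, the interval lookup would be replaced by locating the enclosing simplex of the Delaunay triangulation (for example with `scipy.spatial.Delaunay.find_simplex`) and using its d+1 barycentric coordinates as the row of probabilities.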

Related News & Events


NEWS CDC-ECC 2011: publication by Alan W. Esenther and Daniel N. Nikovski

Date: December 12, 2011

Where: IEEE Conference on Decision and Control and European Control Conference (CDC-ECC)

MERL Contact: Daniel N. Nikovski

Research Area: Optimization

Brief: The paper "Construction of Embedded Markov Decision Processes for Optimal Control of Non-Linear Systems with Continuous State Spaces" by Nikovski, D. and Esenther, A. was presented at the IEEE Conference on Decision and Control and European Control Conference (CDC-ECC).