TR2009-009

View Synthesis Prediction for Multiview Video Coding


- Yea, S., Vetro, A., "View Synthesis Prediction for Multiview Video Coding", Image Communication, Vol. 24, No. 1-2, pp. 89-100, January 2009.

    @article{Yea2009jan,
      author = {Yea, S. and Vetro, A.},
      title = {View Synthesis Prediction for Multiview Video Coding},
      journal = {Image Communication},
      year = 2009,
      volume = 24,
      number = {1-2},
      pages = {89--100},
      month = jan,
      issn = {0923-5965},
      url = {https://www.merl.com/publications/TR2009-009}
    }


MERL Contact: Anthony Vetro

Research Area:

Digital Video

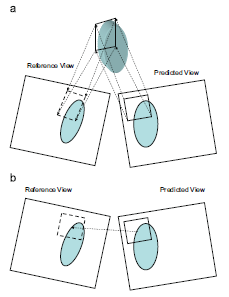

prediction (VSP, a).

Abstract:

We propose a rate-distortion-optimized framework that incorporates view synthesis for improved prediction in multiview video coding. In the proposed scheme, auxiliary information, including depth data, is encoded and used at the decoder to generate the view synthesis prediction data. The proposed method employs optimal mode decision that includes view synthesis prediction, together with sub-pixel reference matching to improve the accuracy of view synthesis prediction. Novel variants of the skip and direct modes are also presented; they infer the depth and correction vector information from neighboring blocks in a synthesized reference picture, reducing the bits needed for the view synthesis prediction mode.

We demonstrate two multiview video coding scenarios in which view synthesis prediction is employed. In the first scenario, the goal is to improve the coding efficiency of multiview video, where block-based depths and correction vectors are encoded losslessly by CABAC on a macroblock basis. A variable block-size depth/motion search algorithm is described. Experimental results demonstrate that view synthesis prediction provides some coding gains when combined with disparity-compensated prediction.

In the second scenario, the goal is to use view synthesis prediction to reduce the rate overhead incurred by transmitting depth maps for improved support of 3DTV and free-viewpoint video applications. It is assumed that the complete depth map for each view is encoded separately from the multiview video and used at the receiver to generate intermediate views. We utilize this information for view synthesis prediction to improve overall coding efficiency. Experimental results show that the rate overhead incurred by coding depth maps of varying quality can be offset by using the proposed view synthesis prediction techniques to reduce the bitrate required for coding multiview video.
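The abstract describes rate-distortion-optimized mode decision in which view synthesis prediction competes with other prediction modes. A minimal sketch of such a decision follows, assuming the common Lagrangian cost J = D + λR with sum-of-squared-differences distortion. The mode labels, rate values, and helper names (`Candidate`, `ssd`, `choose_mode`) are hypothetical illustrations, not the paper's implementation or any reference encoder's API.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Candidate:
    """One candidate prediction for the current block (hypothetical structure)."""
    mode: str                # illustrative label, e.g. "temporal", "disparity", "vsp"
    prediction: np.ndarray   # predicted samples, same shape as the block
    rate_bits: float         # assumed estimate of bits for side info plus residual


def ssd(block: np.ndarray, pred: np.ndarray) -> float:
    """Sum of squared differences, computed in int64 to avoid uint8 overflow."""
    diff = block.astype(np.int64) - pred.astype(np.int64)
    return float(np.sum(diff * diff))


def choose_mode(block: np.ndarray, candidates: list[Candidate], lam: float) -> tuple[str, float]:
    """Return the mode with the lowest Lagrangian cost J = D + lam * R."""
    best_mode, best_cost = "", float("inf")
    for cand in candidates:
        cost = ssd(block, cand.prediction) + lam * cand.rate_bits
        if cost < best_cost:
            best_mode, best_cost = cand.mode, cost
    return best_mode, best_cost


if __name__ == "__main__":
    block = np.full((4, 4), 100, dtype=np.uint8)
    candidates = [
        Candidate("temporal", np.full((4, 4), 104, dtype=np.uint8), rate_bits=10),
        Candidate("disparity", np.full((4, 4), 101, dtype=np.uint8), rate_bits=30),
        Candidate("vsp", np.full((4, 4), 100, dtype=np.uint8), rate_bits=24),
    ]
    print(choose_mode(block, candidates, lam=2.0))  # ('vsp', 48.0)
```

Raising λ penalizes side information more heavily, so a VSP block whose depth and correction vector cost many bits can lose to a cheaper mode even when its prediction error is smaller.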
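View synthesis prediction maps a block of the target view to a position in a reference view using depth. The sketch below assumes a rectified, horizontally aligned camera pair, for which disparity in pixels is d = f·B/Z (focal length f in pixels, baseline B, depth Z), one depth value per block as in the first scenario, and an optional correction vector added to the warped position. Fractional positions are resolved by bilinear interpolation purely to illustrate sub-pixel accuracy; the paper's warping procedure and interpolation filter may differ, and the function names, sign convention, and clamping behavior are assumptions.

```python
import numpy as np


def depth_to_disparity(z: float, focal_px: float, baseline: float) -> float:
    """Disparity in pixels for a rectified, horizontally aligned camera pair."""
    return focal_px * baseline / z


def vsp_block(ref: np.ndarray, x0: int, y0: int, size: int,
              z: float, focal_px: float, baseline: float,
              cv: tuple[float, float] = (0.0, 0.0)) -> np.ndarray:
    """Predict a size x size block of the target view from a reference view.

    Assumed convention: target pixel (x, y) is sampled from reference
    position (x + d + cv_x, y + cv_y), where d is the depth-derived disparity
    and cv is a hypothetical correction vector. Positions are clamped to the
    frame and fractional positions use bilinear interpolation.
    """
    h, w = ref.shape
    d = depth_to_disparity(z, focal_px, baseline)
    ys, xs = np.mgrid[y0:y0 + size, x0:x0 + size].astype(np.float64)
    sx = np.clip(xs + d + cv[0], 0, w - 1)
    sy = np.clip(ys + cv[1], 0, h - 1)
    x_lo = np.floor(sx).astype(int)
    y_lo = np.floor(sy).astype(int)
    x_hi = np.minimum(x_lo + 1, w - 1)
    y_hi = np.minimum(y_lo + 1, h - 1)
    fx = sx - x_lo  # horizontal sub-pixel fraction
    fy = sy - y_lo  # vertical sub-pixel fraction
    r = ref.astype(np.float64)
    top = (1 - fx) * r[y_lo, x_lo] + fx * r[y_lo, x_hi]
    bottom = (1 - fx) * r[y_hi, x_lo] + fx * r[y_hi, x_hi]
    return (1 - fy) * top + fy * bottom


if __name__ == "__main__":
    ref = np.tile(np.arange(16, dtype=np.float64), (8, 1))  # each row is 0..15
    # f = 100 px, B = 0.1, Z = 4 gives d = 2.5 px, a half-pixel disparity.
    print(vsp_block(ref, x0=2, y0=1, size=4, z=4.0, focal_px=100.0, baseline=0.1))
```

On a horizontal ramp the bilinear interpolation is exact, so each predicted row is simply the column index shifted by 2.5, which makes the half-pixel behavior easy to inspect.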
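The skip and direct variants save bits by inferring depth and correction vectors from neighboring blocks instead of transmitting them. The paper's exact inference rule is not stated in this abstract; as a hedged illustration only, the sketch below is loosely modeled on the component-wise median used for H.264/AVC motion vector prediction and applies it to hypothetical (depth, cv_x, cv_y) triples from the left, top, and top-right neighbors. The type alias and function name are assumptions.

```python
from statistics import median
from typing import Optional, Tuple

# Hypothetical per-block VSP side information: (depth, cv_x, cv_y).
VspParams = Tuple[float, float, float]


def infer_vsp_params(left: Optional[VspParams],
                     top: Optional[VspParams],
                     top_right: Optional[VspParams]) -> Optional[VspParams]:
    """Infer VSP parameters for a skipped block from its causal neighbors.

    Neighbors that are unavailable or not coded in VSP mode are passed as None.
    Each component is the median of the available neighbors; with exactly two
    neighbors, statistics.median returns their mean. Returns None when no
    neighbor is usable, so the caller must fall back to another mode.
    """
    available = [p for p in (left, top, top_right) if p is not None]
    if not available:
        return None
    depth = median(p[0] for p in available)
    cv_x = median(p[1] for p in available)
    cv_y = median(p[2] for p in available)
    return (depth, cv_x, cv_y)


if __name__ == "__main__":
    print(infer_vsp_params((10, 1, 0), (12, -1, 0), (30, 0, 2)))  # (12, 0, 0)
```

Because the decoder sees the same causal neighbors, it can repeat this inference and reconstruct the block without any depth or correction vector bits; the median keeps a single outlier neighbor (the depth of 30 above) from dominating.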
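The first scenario mentions a variable block-size depth/motion search. One common way to realize such a search is a quadtree: find the best candidate for the whole block, recursively do the same for its four quadrants, and split only when the children's total cost is lower. The sketch below assumes integer horizontal disparities as a proxy for depth candidates (under the rectified-camera assumption each depth maps to a disparity), SAD distortion, and a fixed hypothetical bit cost per coded block; it illustrates the general technique, not the paper's specific algorithm.

```python
import numpy as np


def block_sad(cur, ref, x0, y0, size, d):
    """SAD between a block of the current view and the reference shifted by d."""
    c = cur[y0:y0 + size, x0:x0 + size].astype(np.int64)
    r = ref[y0:y0 + size, x0 + d:x0 + d + size].astype(np.int64)
    return int(np.abs(c - r).sum())


def best_disparity(cur, ref, x0, y0, size, disparities):
    """Exhaustive search; returns (sad, d) for the best in-bounds candidate."""
    width = ref.shape[1]
    best = None
    for d in disparities:
        if x0 + d < 0 or x0 + d + size > width:
            continue  # candidate would read outside the reference frame
        sad = block_sad(cur, ref, x0, y0, size, d)
        if best is None or sad < best[0]:
            best = (sad, d)
    if best is None:
        raise ValueError("no in-bounds disparity candidate")
    return best


def quadtree_search(cur, ref, x0, y0, size, min_size, disparities, lam, bits_per_block):
    """Return (cost, leaves) where leaves is a list of (x, y, size, d)."""
    sad, d = best_disparity(cur, ref, x0, y0, size, disparities)
    whole_cost = sad + lam * bits_per_block
    half = size // 2
    if half < min_size:
        return whole_cost, [(x0, y0, size, d)]
    split_cost, split_leaves = 0.0, []
    for dy in (0, half):          # top row of quadrants, then bottom row
        for dx in (0, half):
            c, leaves = quadtree_search(cur, ref, x0 + dx, y0 + dy, half,
                                        min_size, disparities, lam, bits_per_block)
            split_cost += c
            split_leaves += leaves
    if split_cost < whole_cost:
        return split_cost, split_leaves
    return whole_cost, [(x0, y0, size, d)]


if __name__ == "__main__":
    ref = np.tile(10 * np.arange(16), (8, 1))
    cur = np.empty((8, 8), dtype=np.int64)
    cur[:, :4] = ref[:, 1:5]    # left half has disparity 1
    cur[:, 4:] = ref[:, 7:11]   # right half has disparity 3
    print(quadtree_search(cur, ref, 0, 0, 8, 4, range(5), lam=1.0, bits_per_block=8))
```

In this toy frame the two halves move by different amounts, so no single disparity fits the 8x8 block and the search splits it into four 4x4 blocks; with a very large λ the per-block bit cost dominates and the unsplit block wins instead.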

Related News & Events


NEWS: Image Communication: publication by Anthony Vetro and Sehoon Yea

Date: January 15, 2009

Where: Image Communication

MERL Contact: Anthony Vetro

Research Area: Digital Video

Brief: The article "View Synthesis Prediction for Multiview Video Coding" by Yea, S. and Vetro, A. was published in Image Communication.